diff --git a/docs/chapter1/img/1.1.png b/docs/chapter1/img/1.1.png

deleted file mode 100644

index e36284a..0000000

Binary files a/docs/chapter1/img/1.1.png and /dev/null differ

diff --git a/docs/chapter1/img/1.10.png b/docs/chapter1/img/1.10.png

deleted file mode 100644

index 532438e..0000000

Binary files a/docs/chapter1/img/1.10.png and /dev/null differ

diff --git a/docs/chapter1/img/1.11.png b/docs/chapter1/img/1.11.png

deleted file mode 100644

index 0c2375a..0000000

Binary files a/docs/chapter1/img/1.11.png and /dev/null differ

diff --git a/docs/chapter1/img/1.12.png b/docs/chapter1/img/1.12.png

deleted file mode 100644

index 814dc76..0000000

Binary files a/docs/chapter1/img/1.12.png and /dev/null differ

diff --git a/docs/chapter1/img/1.13.png b/docs/chapter1/img/1.13.png

deleted file mode 100644

index 718d85d..0000000

Binary files a/docs/chapter1/img/1.13.png and /dev/null differ

diff --git a/docs/chapter1/img/1.14.png b/docs/chapter1/img/1.14.png

deleted file mode 100644

index c3b2415..0000000

Binary files a/docs/chapter1/img/1.14.png and /dev/null differ

diff --git a/docs/chapter1/img/1.14a.png b/docs/chapter1/img/1.14a.png

deleted file mode 100644

index 31000c5..0000000

Binary files a/docs/chapter1/img/1.14a.png and /dev/null differ

diff --git a/docs/chapter1/img/1.14b.png b/docs/chapter1/img/1.14b.png

deleted file mode 100644

index 279ab06..0000000

Binary files a/docs/chapter1/img/1.14b.png and /dev/null differ

diff --git a/docs/chapter1/img/1.15.png b/docs/chapter1/img/1.15.png

deleted file mode 100644

index 3a66f69..0000000

Binary files a/docs/chapter1/img/1.15.png and /dev/null differ

diff --git a/docs/chapter1/img/1.15a.png b/docs/chapter1/img/1.15a.png

deleted file mode 100644

index b63571a..0000000

Binary files a/docs/chapter1/img/1.15a.png and /dev/null differ

diff --git a/docs/chapter1/img/1.15b.png b/docs/chapter1/img/1.15b.png

deleted file mode 100644

index 30fa0a2..0000000

Binary files a/docs/chapter1/img/1.15b.png and /dev/null differ

diff --git a/docs/chapter1/img/1.16.png b/docs/chapter1/img/1.16.png

deleted file mode 100644

index ffcd32a..0000000

Binary files a/docs/chapter1/img/1.16.png and /dev/null differ

diff --git a/docs/chapter1/img/1.17.png b/docs/chapter1/img/1.17.png

deleted file mode 100644

index 4520633..0000000

Binary files a/docs/chapter1/img/1.17.png and /dev/null differ

diff --git a/docs/chapter1/img/1.18.png b/docs/chapter1/img/1.18.png

deleted file mode 100644

index 62c7ff6..0000000

Binary files a/docs/chapter1/img/1.18.png and /dev/null differ

diff --git a/docs/chapter1/img/1.19.png b/docs/chapter1/img/1.19.png

deleted file mode 100644

index 7bb1c7b..0000000

Binary files a/docs/chapter1/img/1.19.png and /dev/null differ

diff --git a/docs/chapter1/img/1.2.png b/docs/chapter1/img/1.2.png

deleted file mode 100644

index 8214682..0000000

Binary files a/docs/chapter1/img/1.2.png and /dev/null differ

diff --git a/docs/chapter1/img/1.20.png b/docs/chapter1/img/1.20.png

deleted file mode 100644

index a5ebccb..0000000

Binary files a/docs/chapter1/img/1.20.png and /dev/null differ

diff --git a/docs/chapter1/img/1.21.png b/docs/chapter1/img/1.21.png

deleted file mode 100644

index e5f0f0c..0000000

Binary files a/docs/chapter1/img/1.21.png and /dev/null differ

diff --git a/docs/chapter1/img/1.22.png b/docs/chapter1/img/1.22.png

deleted file mode 100644

index d646ee1..0000000

Binary files a/docs/chapter1/img/1.22.png and /dev/null differ

diff --git a/docs/chapter1/img/1.23.png b/docs/chapter1/img/1.23.png

deleted file mode 100644

index 94f82f8..0000000

Binary files a/docs/chapter1/img/1.23.png and /dev/null differ

diff --git a/docs/chapter1/img/1.24.png b/docs/chapter1/img/1.24.png

deleted file mode 100644

index aa12547..0000000

Binary files a/docs/chapter1/img/1.24.png and /dev/null differ

diff --git a/docs/chapter1/img/1.25.png b/docs/chapter1/img/1.25.png

deleted file mode 100644

index 48a9268..0000000

Binary files a/docs/chapter1/img/1.25.png and /dev/null differ

diff --git a/docs/chapter1/img/1.26.png b/docs/chapter1/img/1.26.png

deleted file mode 100644

index e2584b3..0000000

Binary files a/docs/chapter1/img/1.26.png and /dev/null differ

diff --git a/docs/chapter1/img/1.27.png b/docs/chapter1/img/1.27.png

deleted file mode 100644

index ba7cd96..0000000

Binary files a/docs/chapter1/img/1.27.png and /dev/null differ

diff --git a/docs/chapter1/img/1.28.png b/docs/chapter1/img/1.28.png

deleted file mode 100644

index 346c89c..0000000

Binary files a/docs/chapter1/img/1.28.png and /dev/null differ

diff --git a/docs/chapter1/img/1.29.png b/docs/chapter1/img/1.29.png

deleted file mode 100644

index a46b39f..0000000

Binary files a/docs/chapter1/img/1.29.png and /dev/null differ

diff --git a/docs/chapter1/img/1.3.png b/docs/chapter1/img/1.3.png

deleted file mode 100644

index e737bc6..0000000

Binary files a/docs/chapter1/img/1.3.png and /dev/null differ

diff --git a/docs/chapter1/img/1.30.png b/docs/chapter1/img/1.30.png

deleted file mode 100644

index a00d6b0..0000000

Binary files a/docs/chapter1/img/1.30.png and /dev/null differ

diff --git a/docs/chapter1/img/1.31.png b/docs/chapter1/img/1.31.png

deleted file mode 100644

index 8819bb5..0000000

Binary files a/docs/chapter1/img/1.31.png and /dev/null differ

diff --git a/docs/chapter1/img/1.32.png b/docs/chapter1/img/1.32.png

deleted file mode 100644

index fa19878..0000000

Binary files a/docs/chapter1/img/1.32.png and /dev/null differ

diff --git a/docs/chapter1/img/1.33.png b/docs/chapter1/img/1.33.png

deleted file mode 100644

index 8f72a7a..0000000

Binary files a/docs/chapter1/img/1.33.png and /dev/null differ

diff --git a/docs/chapter1/img/1.34.png b/docs/chapter1/img/1.34.png

deleted file mode 100644

index 579d9da..0000000

Binary files a/docs/chapter1/img/1.34.png and /dev/null differ

diff --git a/docs/chapter1/img/1.35.png b/docs/chapter1/img/1.35.png

deleted file mode 100644

index 3b3d848..0000000

Binary files a/docs/chapter1/img/1.35.png and /dev/null differ

diff --git a/docs/chapter1/img/1.36.png b/docs/chapter1/img/1.36.png

deleted file mode 100644

index 3bc31ac..0000000

Binary files a/docs/chapter1/img/1.36.png and /dev/null differ

diff --git a/docs/chapter1/img/1.37.png b/docs/chapter1/img/1.37.png

deleted file mode 100644

index 5f94a01..0000000

Binary files a/docs/chapter1/img/1.37.png and /dev/null differ

diff --git a/docs/chapter1/img/1.38.png b/docs/chapter1/img/1.38.png

deleted file mode 100644

index 03951c2..0000000

Binary files a/docs/chapter1/img/1.38.png and /dev/null differ

diff --git a/docs/chapter1/img/1.39.png b/docs/chapter1/img/1.39.png

deleted file mode 100644

index 6f52a11..0000000

Binary files a/docs/chapter1/img/1.39.png and /dev/null differ

diff --git a/docs/chapter1/img/1.4.png b/docs/chapter1/img/1.4.png

deleted file mode 100644

index 3eb81f1..0000000

Binary files a/docs/chapter1/img/1.4.png and /dev/null differ

diff --git a/docs/chapter1/img/1.40.png b/docs/chapter1/img/1.40.png

deleted file mode 100644

index 373dc02..0000000

Binary files a/docs/chapter1/img/1.40.png and /dev/null differ

diff --git a/docs/chapter1/img/1.41.png b/docs/chapter1/img/1.41.png

deleted file mode 100644

index b3db399..0000000

Binary files a/docs/chapter1/img/1.41.png and /dev/null differ

diff --git a/docs/chapter1/img/1.42.png b/docs/chapter1/img/1.42.png

deleted file mode 100644

index 9aa5c7d..0000000

Binary files a/docs/chapter1/img/1.42.png and /dev/null differ

diff --git a/docs/chapter1/img/1.43.png b/docs/chapter1/img/1.43.png

deleted file mode 100644

index b720d49..0000000

Binary files a/docs/chapter1/img/1.43.png and /dev/null differ

diff --git a/docs/chapter1/img/1.44.png b/docs/chapter1/img/1.44.png

deleted file mode 100644

index fafc170..0000000

Binary files a/docs/chapter1/img/1.44.png and /dev/null differ

diff --git a/docs/chapter1/img/1.45.png b/docs/chapter1/img/1.45.png

deleted file mode 100644

index e36284a..0000000

Binary files a/docs/chapter1/img/1.45.png and /dev/null differ

diff --git a/docs/chapter1/img/1.46.png b/docs/chapter1/img/1.46.png

deleted file mode 100644

index abf2552..0000000

Binary files a/docs/chapter1/img/1.46.png and /dev/null differ

diff --git a/docs/chapter1/img/1.47.png b/docs/chapter1/img/1.47.png

deleted file mode 100644

index bce609e..0000000

Binary files a/docs/chapter1/img/1.47.png and /dev/null differ

diff --git a/docs/chapter1/img/1.5.png b/docs/chapter1/img/1.5.png

deleted file mode 100644

index 5fb5f56..0000000

Binary files a/docs/chapter1/img/1.5.png and /dev/null differ

diff --git a/docs/chapter1/img/1.6.png b/docs/chapter1/img/1.6.png

deleted file mode 100644

index 20433e1..0000000

Binary files a/docs/chapter1/img/1.6.png and /dev/null differ

diff --git a/docs/chapter1/img/1.7.png b/docs/chapter1/img/1.7.png

deleted file mode 100644

index df13685..0000000

Binary files a/docs/chapter1/img/1.7.png and /dev/null differ

diff --git a/docs/chapter1/img/1.8.png b/docs/chapter1/img/1.8.png

deleted file mode 100644

index 3defb23..0000000

Binary files a/docs/chapter1/img/1.8.png and /dev/null differ

diff --git a/docs/chapter1/img/1.9.png b/docs/chapter1/img/1.9.png

deleted file mode 100644

index 3892956..0000000

Binary files a/docs/chapter1/img/1.9.png and /dev/null differ

diff --git a/docs/chapter1/img/learning.png b/docs/chapter1/img/learning.png

deleted file mode 100644

index d3cb87f..0000000

Binary files a/docs/chapter1/img/learning.png and /dev/null differ

diff --git a/docs/chapter1/img/planning.png b/docs/chapter1/img/planning.png

deleted file mode 100644

index c620811..0000000

Binary files a/docs/chapter1/img/planning.png and /dev/null differ

diff --git a/docs/chapter2/chapter2.md b/docs/chapter2/chapter2.md

index 77a541c..54a893a 100644

--- a/docs/chapter2/chapter2.md

+++ b/docs/chapter2/chapter2.md

@@ -667,7 +667,6 @@ $$

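Now consider the second step, policy improvement, and how the policy actually gets improved. Once we have the state-value function, we can compute the Q-function from the reward function and the state-transition function: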

$$

Q_{\pi_{i}}(s, a)=R(s, a)+\gamma \sum_{s^{\prime} \in S} p\left(s^{\prime} \mid s, a\right) V_{\pi_{i}}\left(s^{\prime}\right)

-

$$
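The Q-function computation above, together with the greedy action choice that policy improvement makes from it, can be sketched in a few lines. This is only an illustration under assumptions: the tabular layout (`R[s][a]`, `P[s][a][s2]`, `V[s2]`), the function names, and the toy two-state MDP are all made up for the example and do not come from the book's code.

```python
def q_from_v(R, P, V, gamma):
    """Compute Q(s, a) = R(s, a) + gamma * sum_s' p(s'|s, a) * V(s').

    R[s][a]     : immediate reward (hypothetical tabular layout)
    P[s][a][s2] : transition probability p(s2 | s, a)
    V[s2]       : current state-value estimate
    """
    n_states, n_actions = len(R), len(R[0])
    Q = [[0.0] * n_actions for _ in range(n_states)]
    for s in range(n_states):
        for a in range(n_actions):
            expected_next = sum(P[s][a][s2] * V[s2] for s2 in range(n_states))
            Q[s][a] = R[s][a] + gamma * expected_next
    return Q


def greedy_policy(Q):
    """For each state, pick the action index with the largest Q-value."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in Q]


# Toy MDP with 2 states and 2 actions (made-up numbers).
R = [[0.0, 1.0], [2.0, 0.0]]
P = [[[1.0, 0.0], [0.0, 1.0]],
     [[0.0, 1.0], [1.0, 0.0]]]
V = [1.0, 3.0]

Q = q_from_v(R, P, V, gamma=0.5)
policy = greedy_policy(Q)
print(Q, policy)
```

With these numbers the greedy policy picks action 1 in state 0 and action 0 in state 1.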

Policy improvement then produces a new policy: for each state, we take the action that maximizes its Q-value, that is,

diff --git a/docs/chapter3/chapter3.md b/docs/chapter3/chapter3.md

index 9cce2a0..be74d4a 100644

--- a/docs/chapter3/chapter3.md

+++ b/docs/chapter3/chapter3.md

@@ -357,7 +357,6 @@ $$

$$

V\left(s_{t}\right) \leftarrow V\left(s_{t}\right)+\alpha\left(G_{t}^{n}-V\left(s_{t}\right)\right)

-

$$
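The n-step update above can be sketched as follows. The sketch assumes the usual definition of the n-step return, $G_t^n = r_{t+1} + \gamma r_{t+2} + \dots + \gamma^{n-1} r_{t+n} + \gamma^n V(s_{t+n})$; the function names and the sample numbers are hypothetical.

```python
def n_step_return(rewards, v_bootstrap, gamma):
    """n-step return: discounted sum of n rewards plus a bootstrapped value.

    rewards     : [r_{t+1}, ..., r_{t+n}]
    v_bootstrap : current estimate V(s_{t+n})
    """
    g = v_bootstrap
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td_update(v_s, target, alpha):
    """V(s_t) <- V(s_t) + alpha * (target - V(s_t))."""
    return v_s + alpha * (target - v_s)


g = n_step_return([1.0, 0.0, 2.0], v_bootstrap=4.0, gamma=0.5)
new_v = td_update(1.0, g, alpha=0.5)
print(g, new_v)
```

Setting `rewards` to a single element gives the one-step TD target, while passing the whole remaining episode with `v_bootstrap=0.0` gives the Monte Carlo return.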

### 3.3.3 Bootstrapping and sampling in dynamic programming, Monte Carlo, and temporal-difference methods

@@ -382,7 +381,6 @@ $$

As shown in Figure 3.20, the Monte Carlo method follows a single branch from the current state and updates every state along that path, that is,

$$

-

V\left(s_{t}\right) \leftarrow V\left(s_{t}\right)+\alpha\left(G_{t}-V\left(s_{t}\right)\right)

$$
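A minimal sketch of this Monte Carlo update, applied to every state on one sampled path, might look like the following. It assumes an every-visit variant, a dictionary-based value table, and that `rewards[t]` is the reward received after leaving `states[t]`; none of these details are fixed by the text.

```python
def mc_update_episode(V, states, rewards, alpha, gamma):
    """Update V for every state visited on one sampled path.

    Walks the path backwards so that the return G_t can be
    accumulated incrementally as G_t = r + gamma * G_{t+1}.
    """
    g = 0.0
    for t in reversed(range(len(states))):
        g = rewards[t] + gamma * g
        s = states[t]
        V[s] = V[s] + alpha * (g - V[s])
    return V


V = {"A": 0.0, "B": 0.0}
mc_update_episode(V, ["A", "B"], [1.0, 2.0], alpha=0.5, gamma=1.0)
print(V)
```

Unlike the temporal-difference update, nothing here can be computed until the episode has finished, because each $G_t$ depends on all later rewards.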

diff --git a/docs/chapter4/chapter4.md b/docs/chapter4/chapter4.md

index b4dfac1..d303f1b 100644

--- a/docs/chapter4/chapter4.md

+++ b/docs/chapter4/chapter4.md

@@ -98,7 +98,6 @@ $$

As shown in Equation (4.2), we sum over $\tau$ and weight the product of $R(\tau)$ and $\nabla \log p_{\theta}(\tau)$ by $p_{\theta}(\tau)$. Because the weights are $p_{\theta}(\tau)$, the sum can be written as an expectation: we sample $\tau$ from the distribution $p_{\theta}(\tau)$ and compute $R(\tau)$ times $\nabla\log p_{\theta}(\tau)$, and summing over all possible $\tau$ gives the expected value.

$$

-

\begin{aligned}

\nabla \bar{R}_{\theta}&=\sum_{\tau} R(\tau) \nabla p_{\theta}(\tau)\\&=\sum_{\tau} R(\tau) p_{\theta}(\tau) \frac{\nabla p_{\theta}(\tau)}{p_{\theta}(\tau)} \\&=

\sum_{\tau} R(\tau) p_{\theta}(\tau) \nabla \log p_{\theta}(\tau) \\

@@ -114,7 +113,6 @@ $$

$$

The detailed computation of $\nabla \log p_{\theta}(\tau)$ can be written as

$$

-

\begin{aligned}

\nabla \log p_{\theta}(\tau) &= \nabla \left(\log p(s_1)+\sum_{t=1}^{T}\log p_{\theta}(a_t|s_t)+ \sum_{t=1}^{T}\log p(s_{t+1}|s_t,a_t) \right) \\

&= \nabla \log p(s_1)+ \nabla \sum_{t=1}^{T}\log p_{\theta}(a_t|s_t)+ \nabla \sum_{t=1}^{T}\log p(s_{t+1}|s_t,a_t) \\

@@ -125,7 +123,6 @@ $$

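Note that $p(s_1)$ and $p(s_{t+1}|s_t,a_t)$ come from the environment, while $p_\theta(a_t|s_t)$ comes from the agent. Because $p(s_1)$ and $p(s_{t+1}|s_t,a_t)$ are determined by the environment and do not depend on $\theta$, we have $\nabla \log p(s_1)=0$ and $\nabla \sum_{t=1}^{T}\log p(s_{t+1}|s_t,a_t)=0$.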

$$

-

\begin{aligned}

\nabla \bar{R}_{\theta}&=\sum_{\tau} R(\tau) \nabla p_{\theta}(\tau)\\&=\sum_{\tau} R(\tau) p_{\theta}(\tau) \frac{\nabla p_{\theta}(\tau)}{p_{\theta}(\tau)} \\&=

\sum_{\tau} R(\tau) p_{\theta}(\tau) \nabla \log p_{\theta}(\tau) \\

@@ -169,13 +166,11 @@ $$

In a classification problem, the objective function is the quantity we maximize or minimize. Here we are maximizing the likelihood, so the quantity to maximize is

$$

-

\frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}} \log p_{\theta}\left(a_{t}^{n} \mid s_{t}^{n}\right)

$$

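In PyTorch we can call built-in functions to compute this loss and its gradients automatically. That covers ordinary classification. The only difference in reinforcement learning is that each loss term is multiplied by a weight: the total reward $R(\tau)$ obtained over the whole episode, not the reward received when taking action $a$ in state $s$. That is,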

$$

-

\frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}} R\left(\tau^{n}\right) \log p_{\theta}\left(a_{t}^{n} \mid s_{t}^{n}\right) \tag{4.5}

$$
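To make Equation (4.5) concrete, the following sketch evaluates the reward-weighted log-likelihood in plain Python. The input format (one tuple per episode holding the total reward and the probabilities the policy assigned to the actions actually taken) and the numbers are assumptions made for illustration; a real implementation would typically compute the same quantity on tensors and let automatic differentiation produce the gradient.

```python
import math


def weighted_log_likelihood(episodes):
    """Evaluate (1/N) * sum_n sum_t R(tau^n) * log p(a_t^n | s_t^n).

    episodes: list of (total_reward, action_probs), where action_probs[t]
    is the probability the policy gave to the action taken at step t.
    """
    total = 0.0
    for total_reward, action_probs in episodes:
        for p in action_probs:
            # Every step in an episode shares the same weight R(tau).
            total += total_reward * math.log(p)
    return total / len(episodes)


# Two made-up episodes: one with reward 2.0, one with reward -1.0.
episodes = [(2.0, [0.5, 0.5]), (-1.0, [0.25])]
objective = weighted_log_likelihood(episodes)
print(objective)
```

Setting every `total_reward` to 1.0 recovers the plain classification objective from the previous equation, which is exactly the "one extra weight" difference the text describes.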

@@ -220,7 +215,6 @@ $$

To handle the problem of rewards that are always positive, we can subtract a baseline $b$ from the reward, that is,

$$

-

\nabla \bar{R}_{\theta} \approx \frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}}\left(R\left(\tau^{n}\right)-b\right) \nabla \log p_{\theta}\left(a_{t}^{n} \mid s_{t}^{n}\right)

$$
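The effect of subtracting $b$ can be seen in a tiny sketch. Here $b$ is taken to be the mean of the sampled episode returns, which is a common choice but an assumption of this example rather than something this passage prescribes.

```python
def baseline_weights(episode_returns):
    """Return R(tau^n) - b for each episode, with b the mean return.

    Using the batch mean as the baseline is an illustrative assumption.
    """
    b = sum(episode_returns) / len(episode_returns)
    return [r - b for r in episode_returns]


# All returns are positive, yet the weights now have mixed signs.
weights = baseline_weights([10.0, 12.0, 14.0])
print(weights)
```

Episodes that did worse than average now receive a negative weight, so the probability of their actions is pushed down instead of up.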

@@ -257,7 +251,6 @@ $$

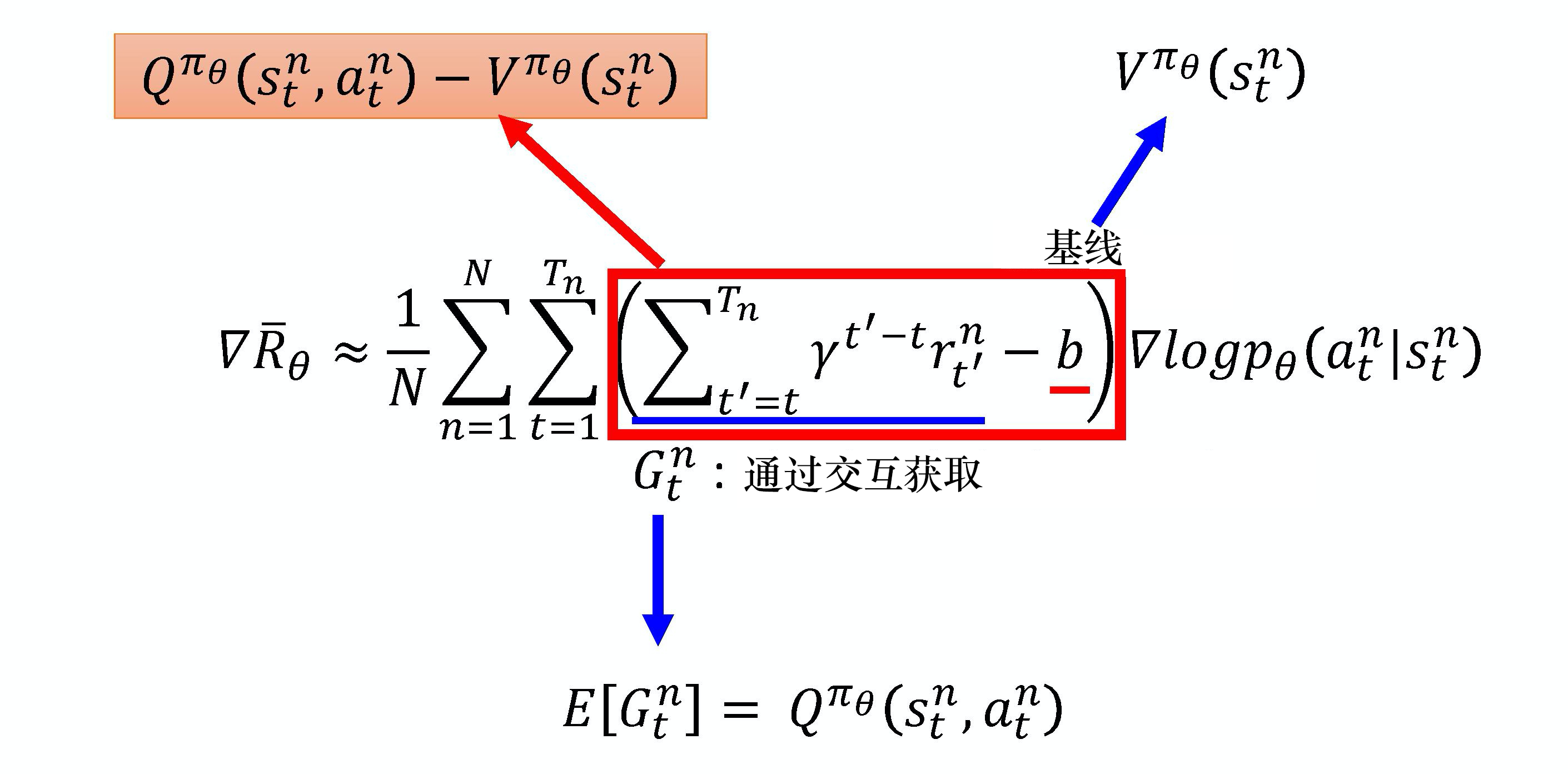

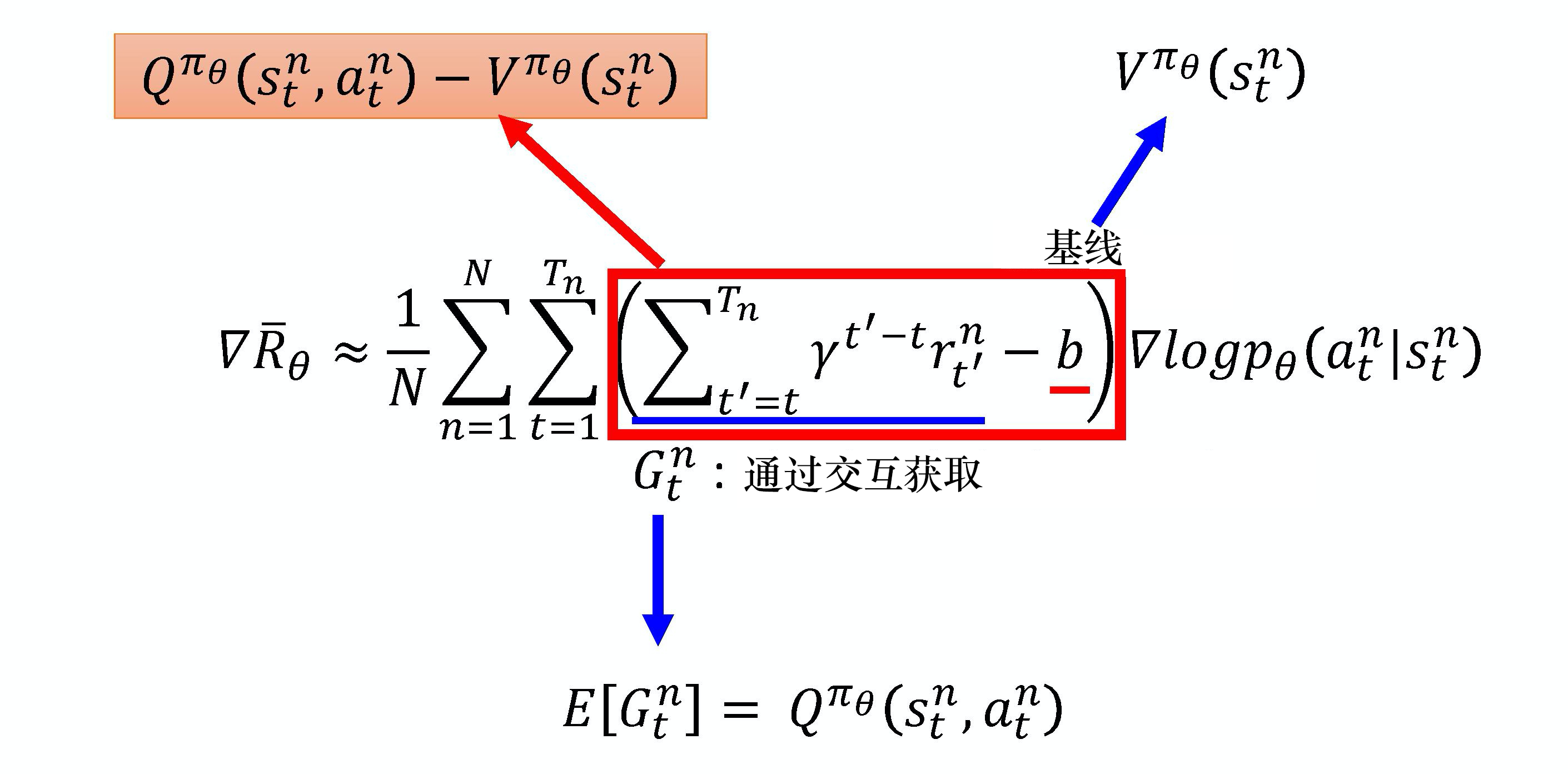

Going one step further, we discount future rewards, that is,

$$

-

\nabla \bar{R}_{\theta} \approx \frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}}\left(\sum_{t^{\prime}=t}^{T_{n}} \gamma^{t^{\prime}-t} r_{t^{\prime}}^{n}-b\right) \nabla \log p_{\theta}\left(a_{t}^{n} \mid s_{t}^{n}\right)

$$

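Why discount future rewards? Taking an action at some time step does affect everything that follows (it is possible that all later rewards are due to that action), but in general the longer the time gap, the smaller the action's influence. For example, if an action is taken at time step 2, the reward received at time step 3 may well be due to that action, but a reward received after time step 100 probably is not. In practice, we multiply $R$ by a discount factor $\gamma$ ($\gamma \in [0,1]$, usually set to 0.9 or 0.99). If $\gamma = 0$, we care only about the immediate reward; if $\gamma = 1$, future rewards count as much as the immediate reward. The larger $t'$ is, the more factors of $\gamma$ multiply the corresponding reward. So when action $a_t$ is taken in state $s_t$, its true score is the sum of all rewards obtained after the action, each discounted by the appropriate power of $\gamma$. For example, suppose the game has two episodes, and in the second episode we take $a_t$ in some state $s_t$ and get +1, take $a_{t+1}$ in $s_{t+1}$ and get +3, and take $a_{t+2}$ in $s_{t+2}$ and get $-$5, at which point the second episode ends. The score of $a_t$ should be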

@@ -284,13 +277,11 @@ $$

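We now introduce **REINFORCE**, the simplest and most classic policy gradient algorithm. REINFORCE updates once per episode: in code, it first collects the reward of every step, then computes the total future reward $G_t$ for each step, and substitutes each $G_t$ into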

$$

-

\nabla \bar{R}_{\theta} \approx \frac{1}{N} \sum_{n=1}^{N} \sum_{t=1}^{T_{n}} G_{t}^{n} \nabla \log \pi_{\theta}\left(a_{t}^{n} \mid s_{t}^{n}\right)

$$

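to optimize the output for every action. When writing the code, we therefore design a function whose input is the reward obtained at each step and whose output is the total future reward of each step, because the total future reward can be written as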

$$

-

\begin{aligned}

G_{t} &=\sum_{k=t+1}^{T} \gamma^{k-t-1} r_{k} \\

&=r_{t+1}+\gamma G_{t+1}
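The recursion $G_t = r_{t+1} + \gamma G_{t+1}$ in this hunk is what makes such a function cheap to write: one backward pass over the rewards is enough. The sketch below is an illustration only; the function name, the list-based layout (`rewards[t]` holds $r_{t+1}$), and the choice $\gamma = 0.5$ are assumptions, while the rewards +1, +3, $-$5 reuse the example from the discounting discussion.

```python
def discounted_returns(rewards, gamma):
    """Compute G_t for every step with G_t = r_{t+1} + gamma * G_{t+1}.

    rewards[t] holds r_{t+1}, the reward received after step t.
    The return after the final step is taken to be 0.
    """
    returns = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


returns = discounted_returns([1.0, 3.0, -5.0], gamma=0.5)
print(returns)
```

Each entry of `returns` is the weight that multiplies $\nabla \log \pi_{\theta}(a_t \mid s_t)$ for the corresponding step in the REINFORCE gradient estimate.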

diff --git a/docs/chapter9/chapter9.md b/docs/chapter9/chapter9.md

index 650749c..ea6bff4 100644

--- a/docs/chapter9/chapter9.md

+++ b/docs/chapter9/chapter9.md

@@ -1,4 +1,4 @@

-# 第 9 章演员-评论员算法

+# 第9章演员-评论员算法

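In the REINFORCE algorithm, each update requires collecting a complete trajectory under a policy and computing the return along it. This sampling scheme has high variance and low learning efficiency. We can borrow the idea of temporal-difference learning and use dynamic programming to improve sample efficiency: the total return starting from state $s$ can be approximated by the immediate reward $r(s,a,s')$ of the current action plus the value function of the next state $s'$.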

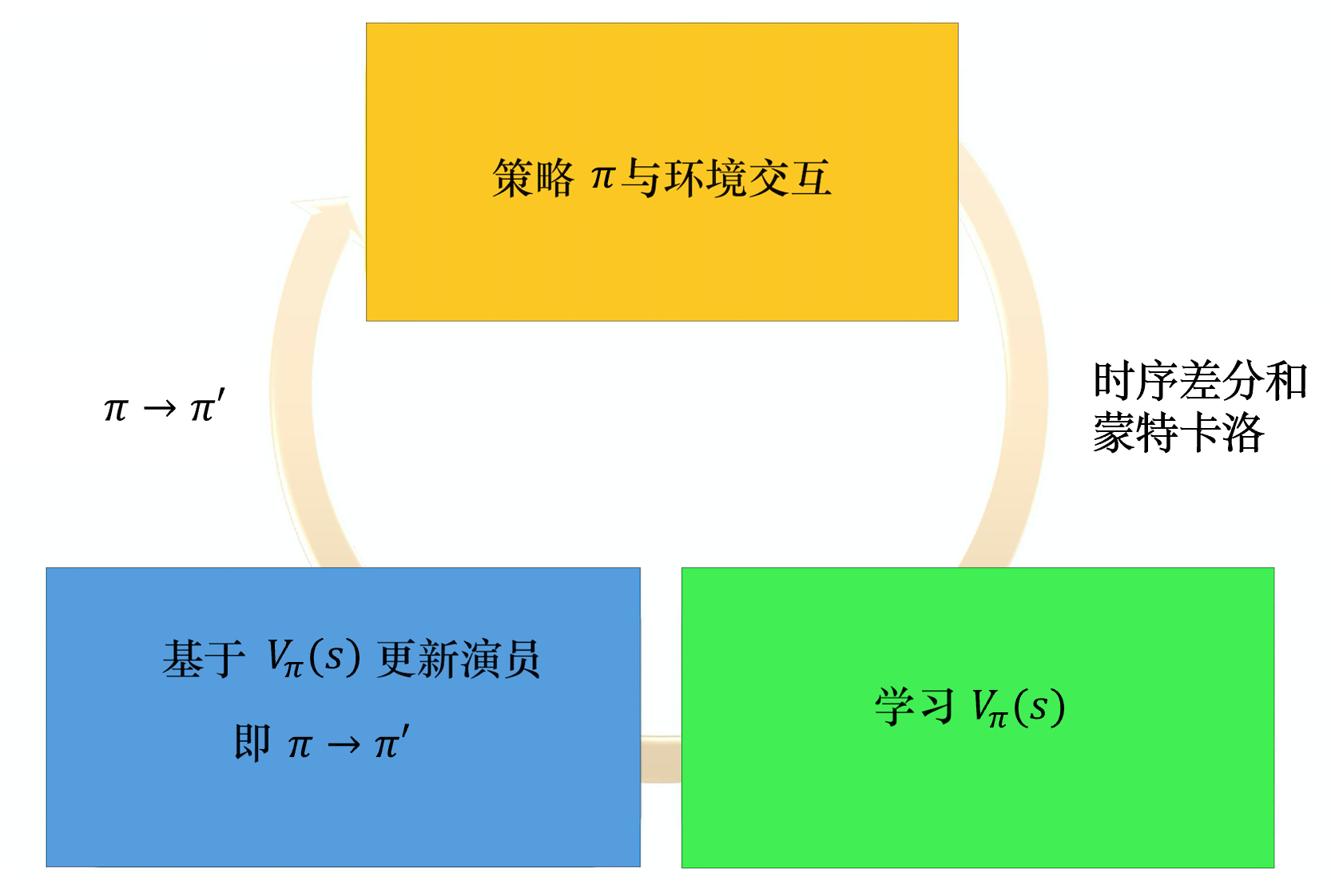

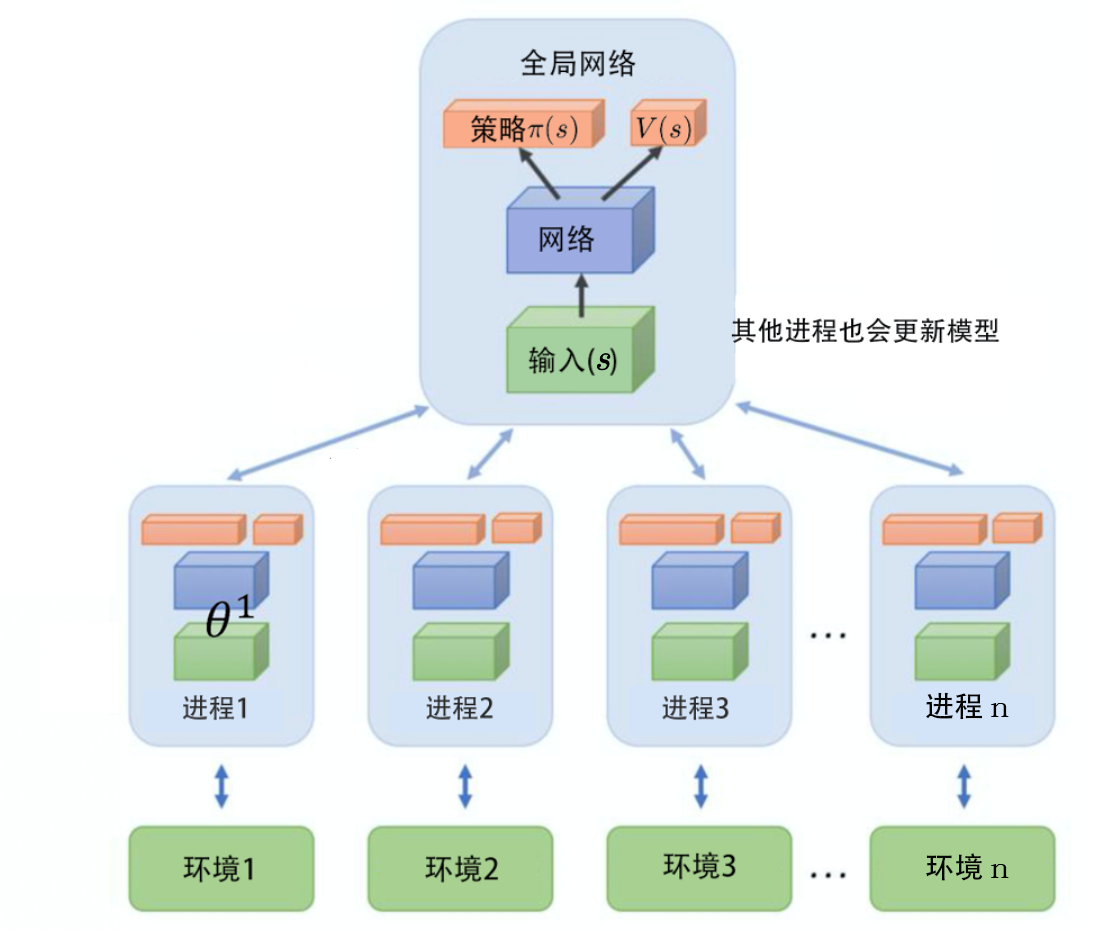

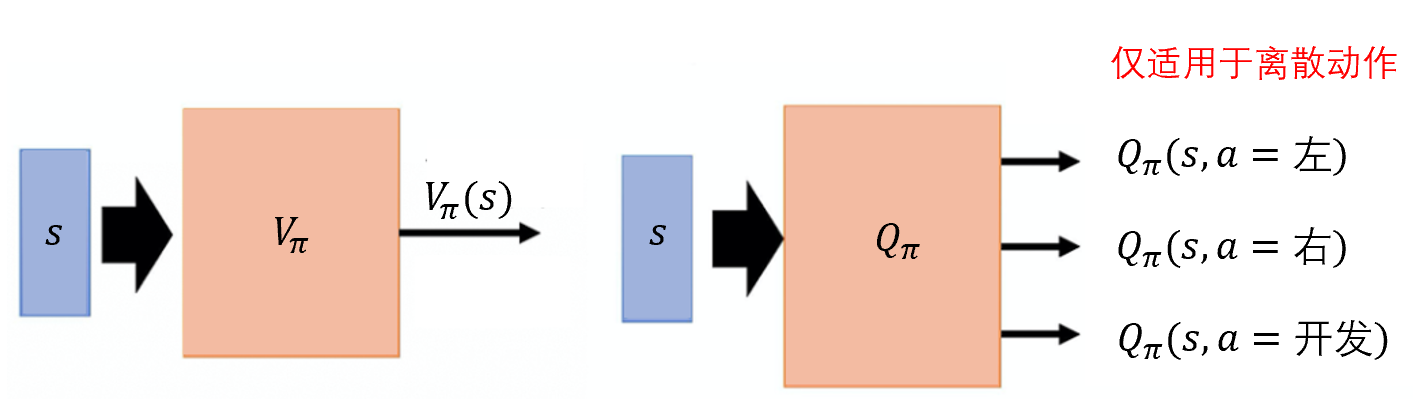

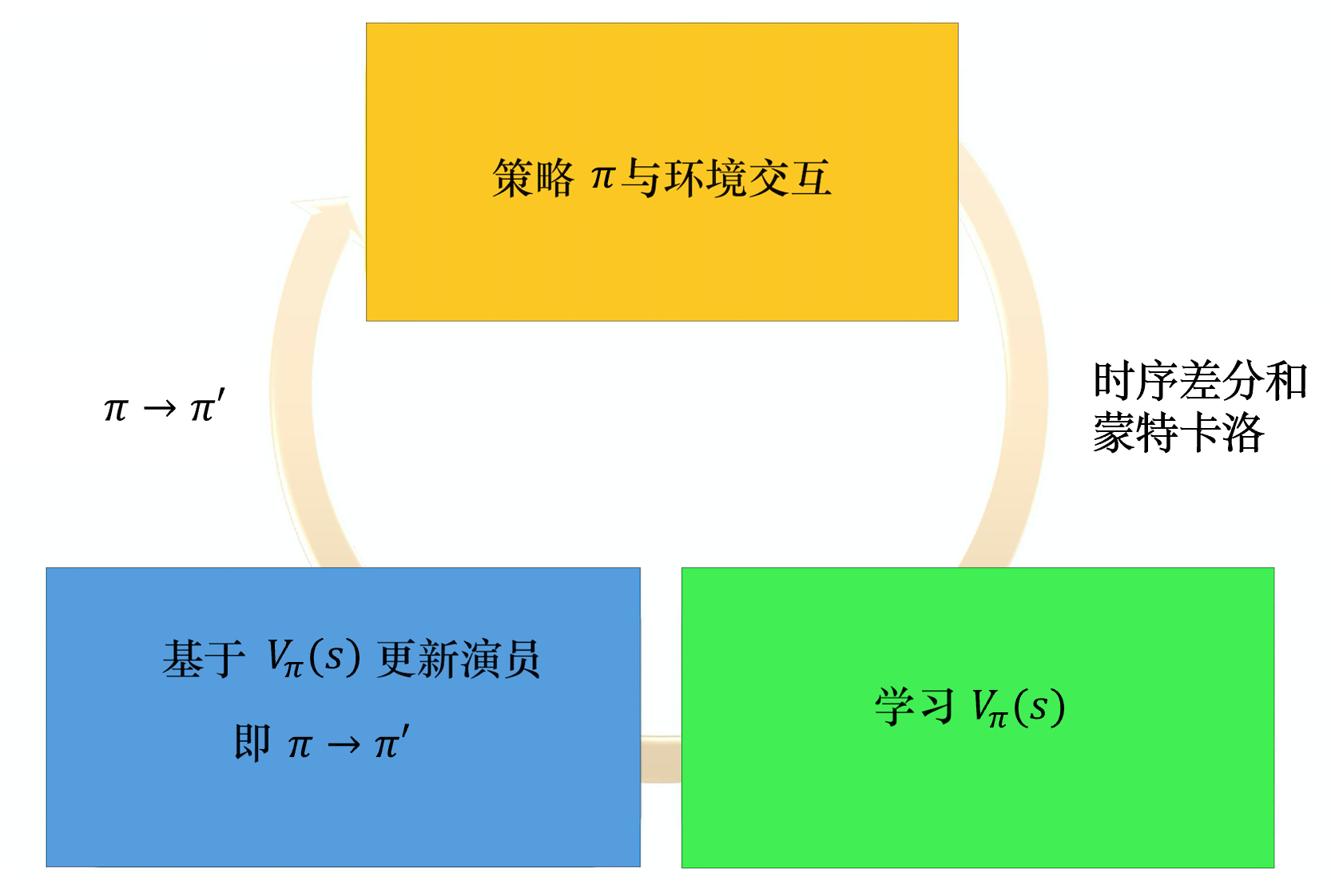

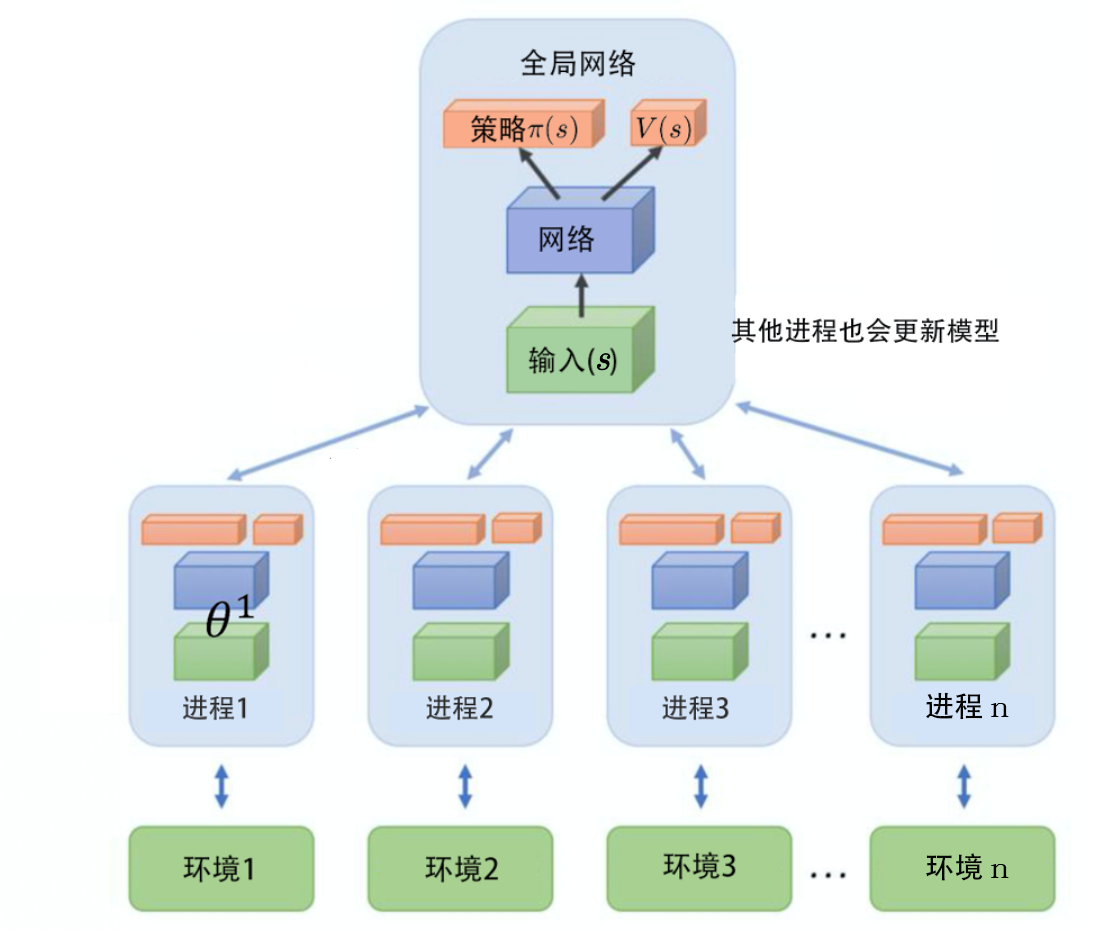

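The **actor-critic algorithm** is a reinforcement learning method that combines **policy gradient** with **temporal-difference learning**. The actor is the policy function $\pi_{\theta}(a|s)$, which learns a policy that obtains as high a return as possible. The critic is the value function $V_{\pi}(s)$, which estimates the value function of the current policy, that is, it evaluates how good the actor is. With the value function, the actor-critic algorithm can update its parameters after every step instead of waiting until the episode ends. The best-known actor-critic algorithm is the asynchronous advantage actor-critic algorithm. Removing the asynchronous part gives the **advantage actor-critic (A2C) algorithm**; adding it back gives the asynchronous advantage actor-critic algorithm.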
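The idea of approximating the return from $s$ by the immediate reward plus the critic's value of $s'$ can be sketched as below. The function names, the discount factor argument, and the `done` flag for terminal transitions are assumptions added for the example; the critic is represented only by the two numbers it would output.

```python
def td_target(reward, v_next, gamma, done=False):
    """Estimate of the return from s: r(s, a, s') + gamma * V(s').

    When the transition ends the episode there is no next state to
    bootstrap from, so the target is just the reward.
    """
    return reward if done else reward + gamma * v_next


def td_error(reward, v_s, v_next, gamma, done=False):
    """How much better the step turned out than the critic expected."""
    return td_target(reward, v_next, gamma, done) - v_s


delta = td_error(reward=1.0, v_s=2.0, v_next=4.0, gamma=0.5)
print(delta)
```

Because this quantity is available after a single transition, both the actor and the critic can be updated at every step rather than once per episode.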

@@ -17,7 +17,7 @@ $$

-

+

Figure 9.1 Review of policy gradient

@@ -33,7 +33,7 @@ A:这里就需要引入基于价值的(value-based)的方法。基于价

-

+

Figure 9.2 Deep Q-network

@@ -50,7 +50,7 @@ $V_{\pi_{\theta}}\left(s_{t}^{n}\right)$ 是 $Q_{\pi_{\theta}}\left(s_{t}^{n}, a

-

+

Figure 9.3 Advantage actor-critic algorithm

@@ -84,7 +84,7 @@ $$

-

+

Figure 9.4 Workflow of the advantage actor-critic algorithm

@@ -94,7 +94,7 @@ $$

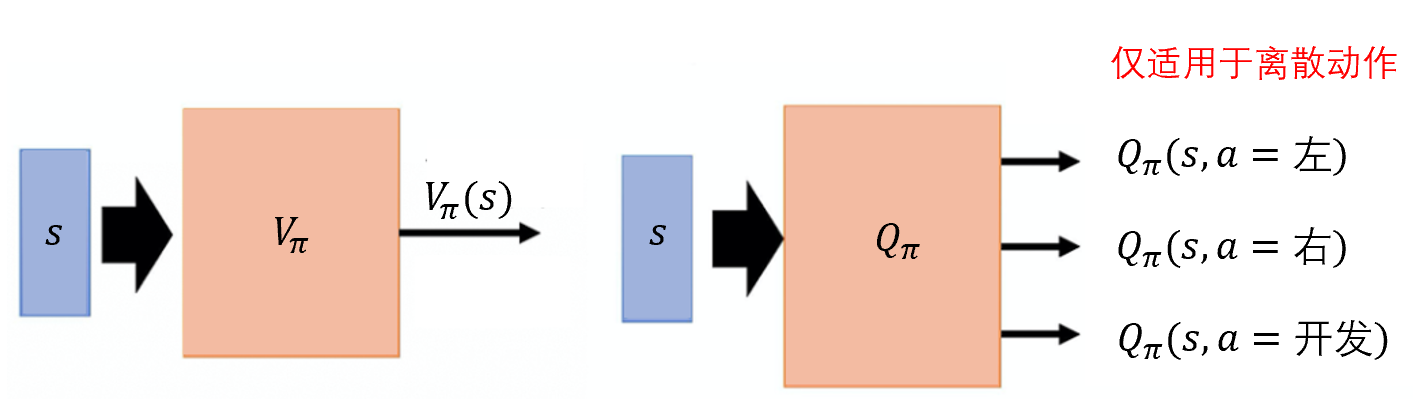

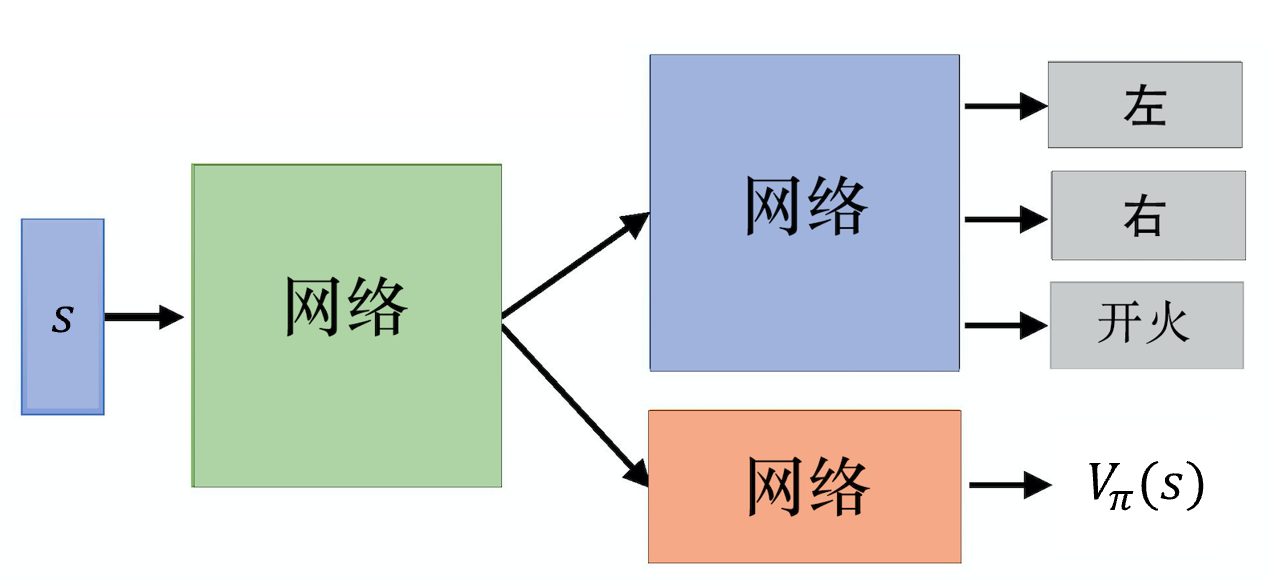

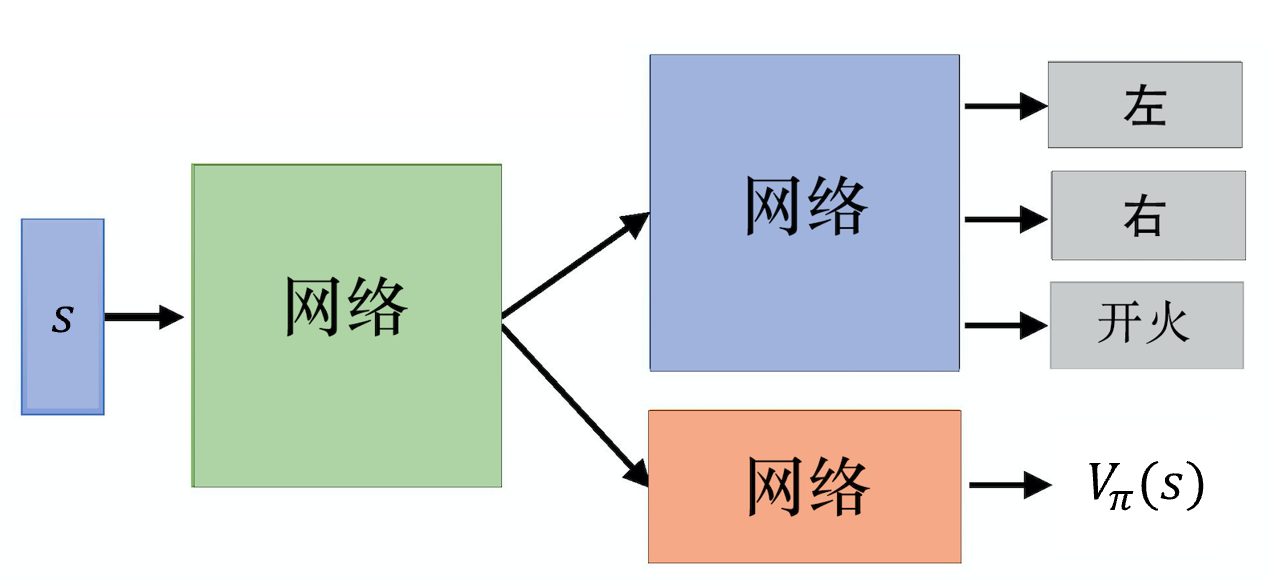

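Figure 9.5 shows an example with discrete actions; the continuous-action case works the same way. Given a state as input, the network decides which action to take. Both the actor network and the critic network take $s$ as input, so their first few layers can be shared.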

-

+

Figure 9.5 Example with discrete actions

@@ -108,7 +108,7 @@ $$

-

+

Figure 9.6 Shadow clone example

@@ -122,7 +122,7 @@ $$

-

+

Figure 9.7 Workflow of the asynchronous advantage actor-critic algorithm

@@ -143,7 +143,7 @@ $$

-

+

Figure 9.8 Pathwise derivative policy gradient

@@ -152,7 +152,7 @@ $$

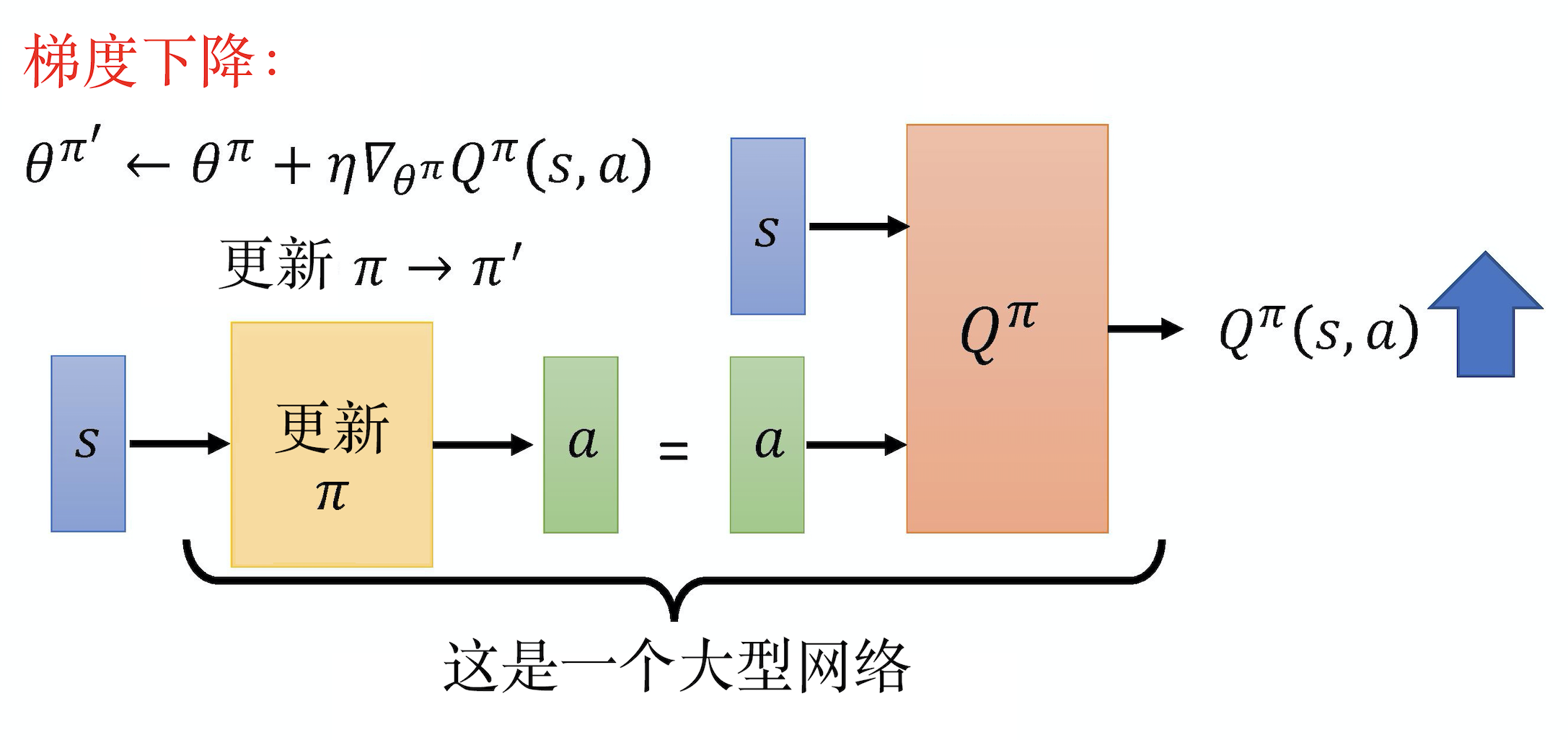

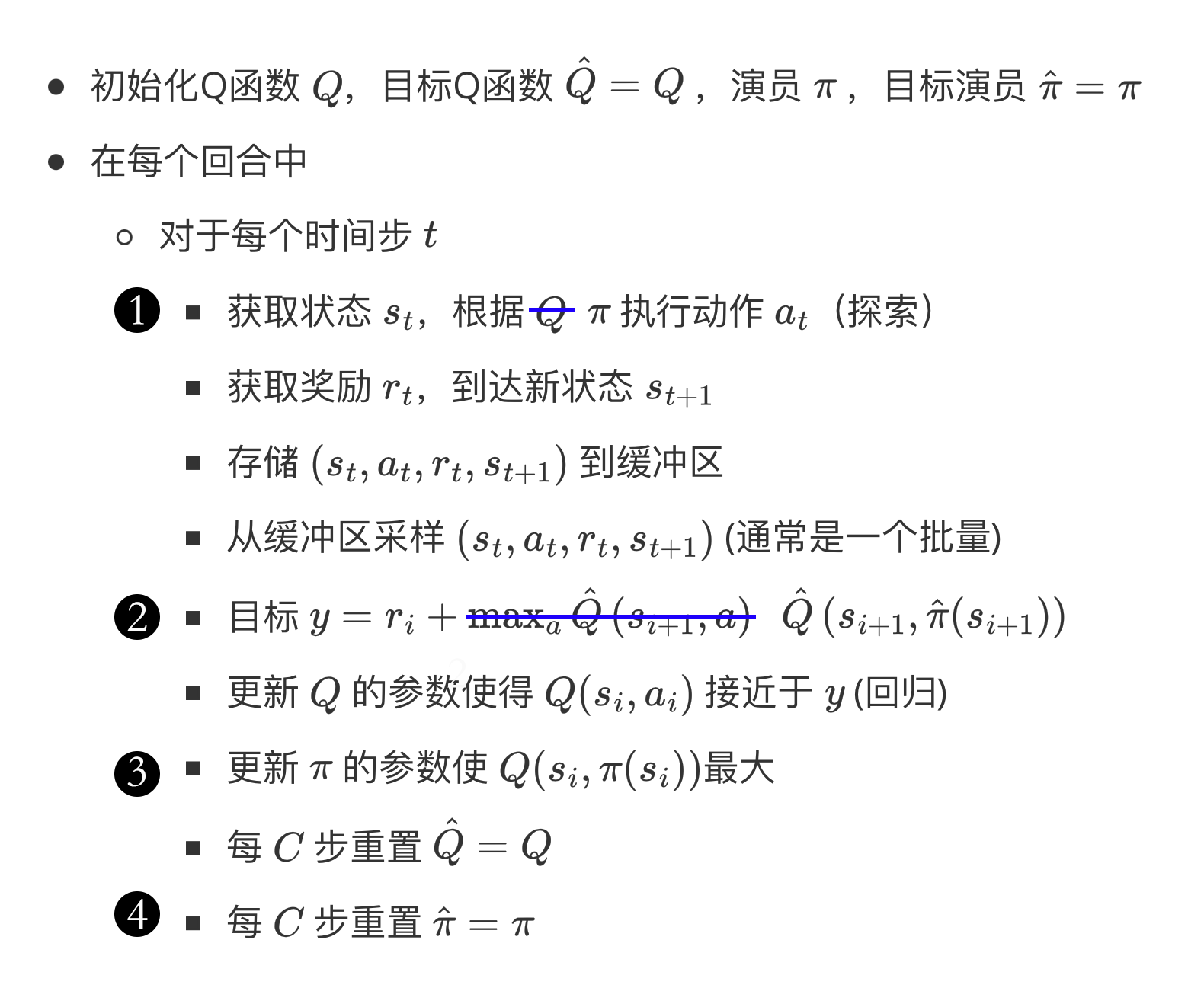

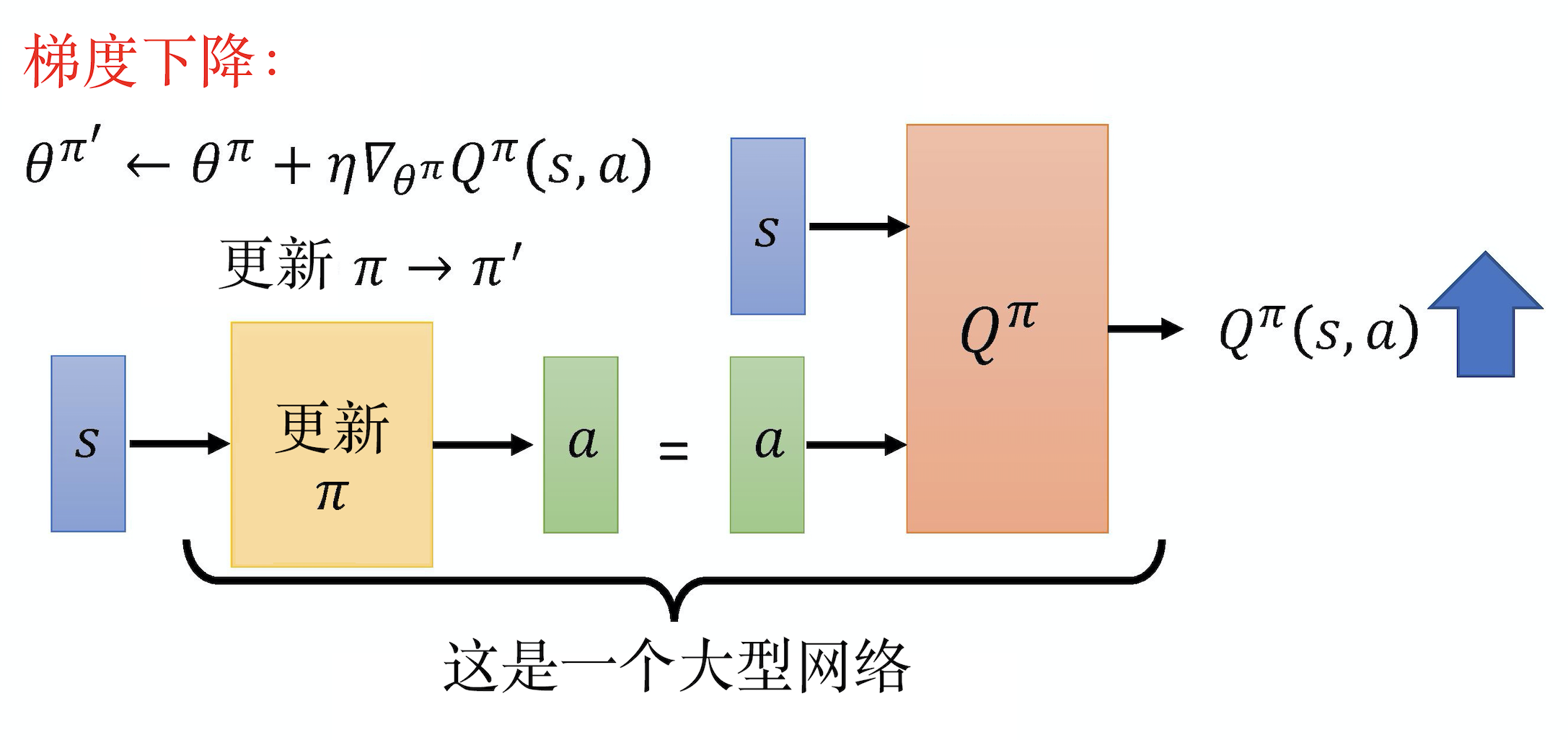

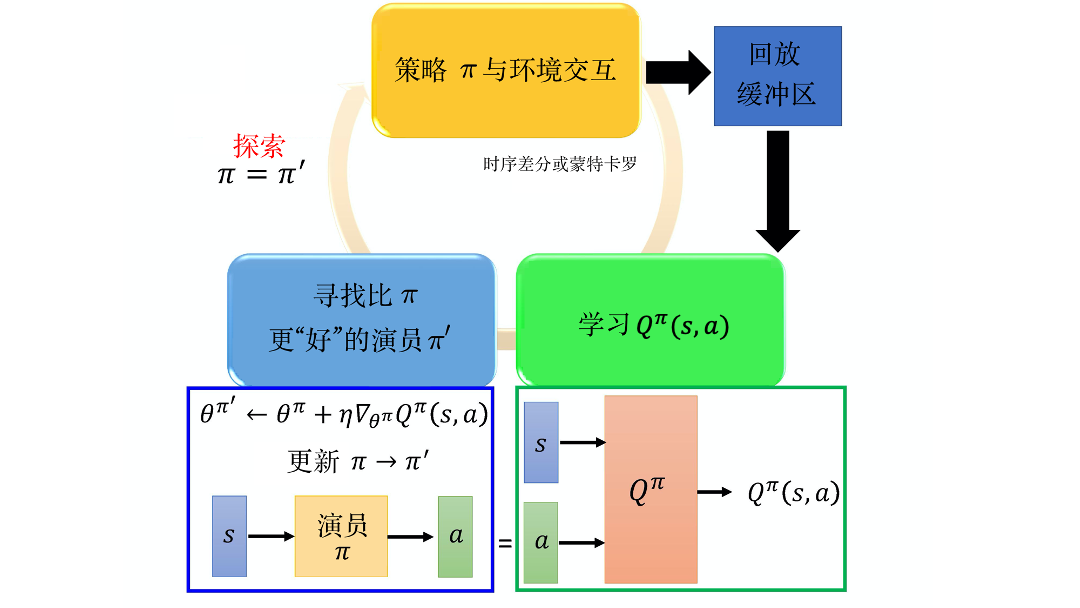

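Let us look at the pathwise derivative policy gradient algorithm. As shown in Figure 9.9, we start with a policy $\pi$ that interacts with the environment, and we estimate its Q-values. Once the Q-values are estimated, we fix the Q-function and train only the actor. Suppose the Q-function is accurate, so it knows which action in a given state yields a large Q-value. We then train the actor so that, given $s$, the action $a$ it outputs makes the Q-function's value as large as possible. We use this criterion to update the policy $\pi$, let the new $\pi$ interact with the environment, estimate the Q-values again, and obtain another $\pi$ that maximizes the Q-function's output. Almost all the techniques used in deep Q-networks, such as experience replay and exploration, also apply here.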

-

+

Figure 9.9 Pathwise derivative policy gradient algorithm

@@ -160,7 +160,7 @@ $$

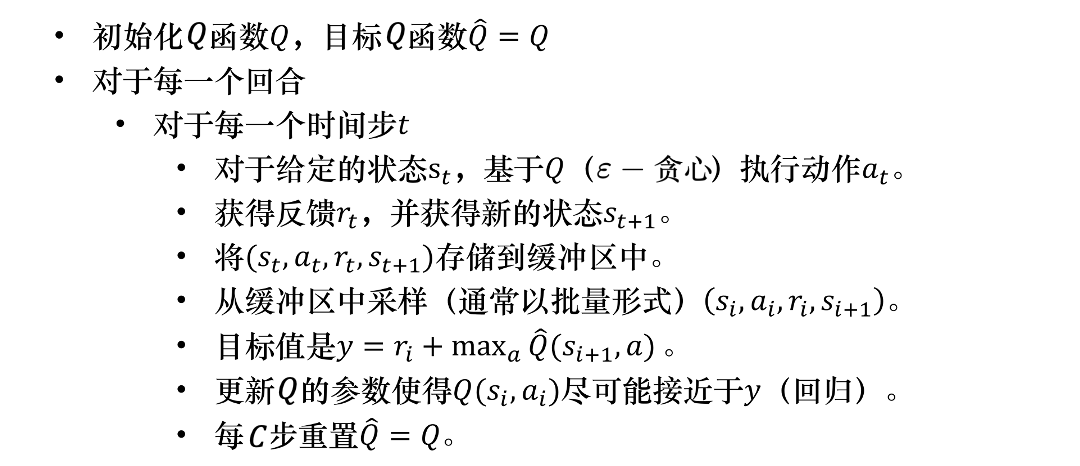

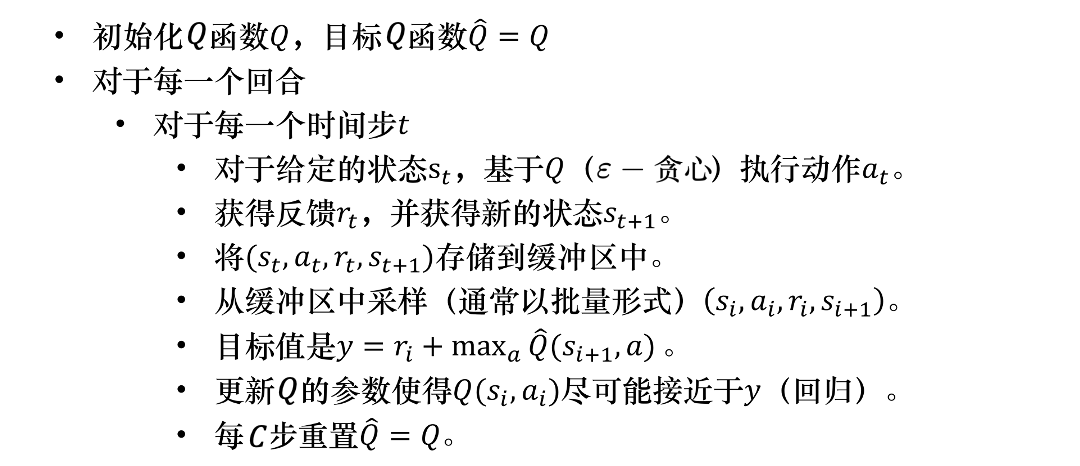

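Figure 9.10 shows the original deep Q-network algorithm. We have a Q-function $Q$ and a separate target Q-function $\hat{Q}$. During training, at every time step of every episode, we observe a state $s_t$ and take an action $a_{t}$. Which action to take is determined by the Q-function: with discrete actions, we take the action $a$ that gives the largest Q-value. Of course, some exploration has to be added for this to perform well. We receive a reward $r_t$, move to a new state $s_{t+1}$, and store $(s_t, a_{t}, r_t, s_{t+1})$ in the replay buffer. Next, we sample a batch from the replay buffer; one sample in the batch might be $(s_i, a_i, r_i, s_{i+1})$. We then compute a target $y=r_{i}+\max _{a} \hat{Q}\left(s_{i+1}, a\right)$. How do we learn $Q$? We want $Q(s_i,a_i)$ to be as close to $y$ as possible, which is a regression problem. Finally, every $C$ steps, we replace $\hat{Q}$ with $Q$.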

-

+

Figure 9.10 Deep Q-network algorithm
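The target computation and regression objective in the deep Q-network step can be sketched with a tabular stand-in for the two networks. Everything concrete here is an assumption for illustration: the dictionary-based Q-tables, the function names, and the sample batch. The `gamma` argument defaults to 1.0 so that the target matches the formula $y=r_{i}+\max_{a}\hat{Q}(s_{i+1},a)$ as written in the text; many implementations use a value below 1 and also treat terminal states separately, which this sketch omits.

```python
def dqn_targets(batch, q_target, gamma=1.0):
    """y_i = r_i + gamma * max_a Q_hat(s_{i+1}, a) for each sample.

    batch    : list of (s, a, r, s_next) tuples from the replay buffer
    q_target : dict mapping a state to its list of target Q-values
    """
    return [r + gamma * max(q_target[s_next]) for (_, _, r, s_next) in batch]


def squared_error(q, batch, targets):
    """Mean squared gap between Q(s_i, a_i) and the targets y_i."""
    errors = [(q[s][a] - y) ** 2 for (s, a, _, _), y in zip(batch, targets)]
    return sum(errors) / len(batch)


q = {0: [0.0, 1.0], 1: [2.0, 0.5]}
q_hat = {s: list(values) for s, values in q.items()}  # copy Q into Q_hat

batch = [(0, 1, 1.0, 1), (1, 0, 0.0, 0)]
targets = dqn_targets(batch, q_hat)
loss = squared_error(q, batch, targets)
print(targets, loss)
```

The line that copies `q` into `q_hat` plays the role of the periodic "replace $\hat{Q}$ with $Q$" step; between copies, `q_hat` stays fixed so the regression targets do not move while `q` is being fitted.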

@@ -182,7 +182,7 @@ $$

-

+

Figure 9.11 From deep Q-network to pathwise derivative policy gradient

@@ -196,7 +196,7 @@ $$

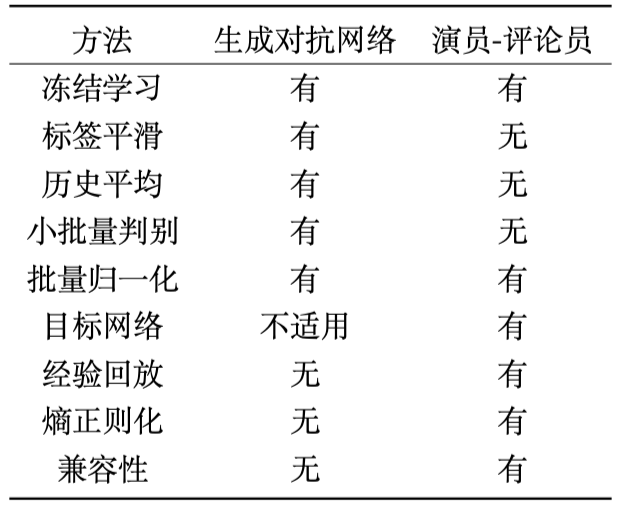

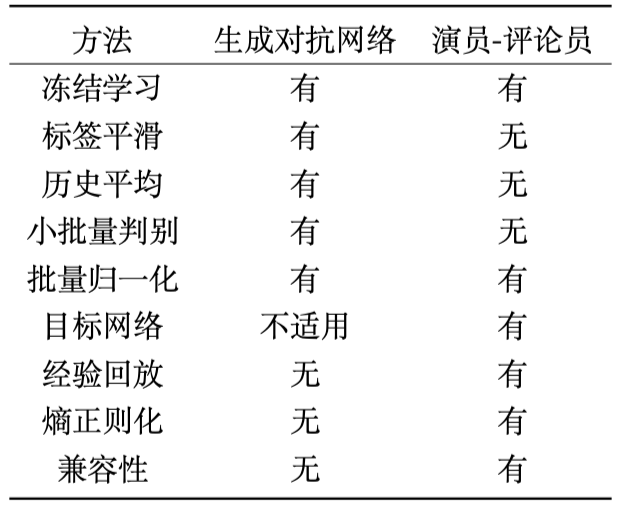

Table 9.1 Connection with generative adversarial networks

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+