Compare commits

103 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

9898932f09 | ||

|

|

c4fc22054b | ||

|

|

449e900837 | ||

|

|

e3ebbec947 | ||

|

|

65a9521ab1 | ||

|

|

b79a600c0d | ||

|

|

30d33fe8f7 | ||

|

|

b325fc1f01 | ||

|

|

954fb02c0c | ||

|

|

5ee398d6b5 | ||

|

|

b754c11814 | ||

|

|

5d19ae594d | ||

|

|

bfa8ed3144 | ||

|

|

0ec23aaa38 | ||

|

|

878ae46d77 | ||

|

|

766e6bbd88 | ||

|

|

0107c7d624 | ||

|

|

d0cf2d2193 | ||

|

|

d1403af548 | ||

|

|

bc20b09f60 | ||

|

|

8e2c0c3686 | ||

|

|

446e1bf7d0 | ||

|

|

54437236f0 | ||

|

|

9ed57a8ae9 | ||

|

|

c66a53ade1 | ||

|

|

7aec4c4b84 | ||

|

|

cfb3511360 | ||

|

|

2adcfacf27 | ||

|

|

09dc684ff6 | ||

|

|

1bc924a6ac | ||

|

|

00db4741bc | ||

|

|

1086447369 | ||

|

|

642c8103c7 | ||

|

|

b053ae614c | ||

|

|

b7583afc9b | ||

|

|

731b08f843 | ||

|

|

64f235aaff | ||

|

|

f0d5a2a45d | ||

|

|

01521fe390 | ||

|

|

a33b882592 | ||

|

|

150b81453c | ||

|

|

a6df479b78 | ||

|

|

dd6445b2ba | ||

|

|

41051a915b | ||

|

|

32ce390939 | ||

|

|

8deec6a6c0 | ||

|

|

0fab70ff3d | ||

|

|

53bbb99a64 | ||

|

|

0e712de805 | ||

|

|

6f74254e96 | ||

|

|

4220bd708b | ||

|

|

3802d88972 | ||

|

|

8cddbf1e1b | ||

|

|

332326e5f6 | ||

|

|

27f64a81d0 | ||

|

|

7e3fa5ade8 | ||

|

|

cc362a2a26 | ||

|

|

dde6167b05 | ||

|

|

fe69f42f92 | ||

|

|

6b050cef43 | ||

|

|

c721c3c769 | ||

|

|

9f8702ca12 | ||

|

|

153b3a35b8 | ||

|

|

88e543a16f | ||

|

|

5906af6d95 | ||

|

|

39953f1870 | ||

|

|

047618a0df | ||

|

|

2da51a51d0 | ||

|

|

8c0e0a296d | ||

|

|

ce0ac607c2 | ||

|

|

f0437cf6af | ||

|

|

32bfc57eed | ||

|

|

909ca96915 | ||

|

|

341ab5b2bf | ||

|

|

d899a19419 | ||

|

|

61b0bc40de | ||

|

|

6fde3f98dd | ||

|

|

838eb9c8db | ||

|

|

687bbfce10 | ||

|

|

4b35113932 | ||

|

|

d672d4d0d7 | ||

|

|

1d3845bb91 | ||

|

|

e5effca854 | ||

|

|

bae82898da | ||

|

|

2e8e7151e3 | ||

|

|

8db74bc34d | ||

|

|

e18392d7d3 | ||

|

|

e4e32c06df | ||

|

|

09802c5632 | ||

|

|

584db78fd0 | ||

|

|

56a41604cb | ||

|

|

8228084a1d | ||

|

|

f16def5f3a | ||

|

|

c0303a57a1 | ||

|

|

07c8a7fb0e | ||

|

|

71691e1fe9 | ||

|

|

e2569e4541 | ||

|

|

51385491de | ||

|

|

bb049714cf | ||

|

|

5dcaa20a6c | ||

|

|

26652bf2ed | ||

|

|

352d2fa28a | ||

|

|

ff5ac0d599 |

107

ADC_function.py

Normal file → Executable file

107

ADC_function.py

Normal file → Executable file

@@ -1,42 +1,99 @@

|

|||||||

|

# -*- coding: utf-8 -*-

|

||||||

|

|

||||||

import requests

|

import requests

|

||||||

from configparser import RawConfigParser

|

from configparser import ConfigParser

|

||||||

import os

|

import os

|

||||||

import re

|

import re

|

||||||

|

import time

|

||||||

|

import sys

|

||||||

|

|

||||||

# content = open('proxy.ini').read()

|

config_file='config.ini'

|

||||||

# content = re.sub(r"\xfe\xff","", content)

|

config = ConfigParser()

|

||||||

# content = re.sub(r"\xff\xfe","", content)

|

|

||||||

# content = re.sub(r"\xef\xbb\xbf","", content)

|

|

||||||

# open('BaseConfig.cfg', 'w').write(content)

|

|

||||||

|

|

||||||

config = RawConfigParser()

|

if os.path.exists(config_file):

|

||||||

if os.path.exists('proxy.ini'):

|

try:

|

||||||

config.read('proxy.ini', encoding='UTF-8')

|

config.read(config_file, encoding='UTF-8')

|

||||||

|

except:

|

||||||

|

print('[-]Config.ini read failed! Please use the offical file!')

|

||||||

else:

|

else:

|

||||||

with open("proxy.ini", "wt", encoding='UTF-8') as code:

|

print('[+]config.ini: not found, creating...')

|

||||||

|

with open("config.ini", "wt", encoding='UTF-8') as code:

|

||||||

|

print("[common]", file=code)

|

||||||

|

print("main_mode=1", file=code)

|

||||||

|

print("failed_output_folder=failed", file=code)

|

||||||

|

print("success_output_folder=JAV_output", file=code)

|

||||||

|

print("", file=code)

|

||||||

print("[proxy]",file=code)

|

print("[proxy]",file=code)

|

||||||

print("proxy=127.0.0.1:1080",file=code)

|

print("proxy=127.0.0.1:1080",file=code)

|

||||||

|

print("timeout=10", file=code)

|

||||||

|

print("retry=3", file=code)

|

||||||

|

print("", file=code)

|

||||||

|

print("[Name_Rule]", file=code)

|

||||||

|

print("location_rule=actor+'/'+number",file=code)

|

||||||

|

print("naming_rule=number+'-'+title",file=code)

|

||||||

|

print("", file=code)

|

||||||

|

print("[update]",file=code)

|

||||||

|

print("update_check=1",file=code)

|

||||||

|

print("", file=code)

|

||||||

|

print("[media]", file=code)

|

||||||

|

print("media_warehouse=emby", file=code)

|

||||||

|

print("#emby or plex", file=code)

|

||||||

|

print("#plex only test!", file=code)

|

||||||

|

print("", file=code)

|

||||||

|

print("[directory_capture]", file=code)

|

||||||

|

print("directory=", file=code)

|

||||||

|

print("", file=code)

|

||||||

|

time.sleep(2)

|

||||||

|

print('[+]config.ini: created!')

|

||||||

|

try:

|

||||||

|

config.read(config_file, encoding='UTF-8')

|

||||||

|

except:

|

||||||

|

print('[-]Config.ini read failed! Please use the offical file!')

|

||||||

|

|

||||||

|

def ReadMediaWarehouse():

|

||||||

|

return config['media']['media_warehouse']

|

||||||

|

|

||||||

|

def UpdateCheckSwitch():

|

||||||

|

check=str(config['update']['update_check'])

|

||||||

|

if check == '1':

|

||||||

|

return '1'

|

||||||

|

elif check == '0':

|

||||||

|

return '0'

|

||||||

|

elif check == '':

|

||||||

|

return '0'

|

||||||

def get_html(url,cookies = None):#网页请求核心

|

def get_html(url,cookies = None):#网页请求核心

|

||||||

|

try:

|

||||||

|

proxy = config['proxy']['proxy']

|

||||||

|

timeout = int(config['proxy']['timeout'])

|

||||||

|

retry_count = int(config['proxy']['retry'])

|

||||||

|

except:

|

||||||

|

print('[-]Proxy config error! Please check the config.')

|

||||||

|

i = 0

|

||||||

|

while i < retry_count:

|

||||||

|

try:

|

||||||

if not str(config['proxy']['proxy']) == '':

|

if not str(config['proxy']['proxy']) == '':

|

||||||

proxies = {

|

proxies = {"http": "http://" + proxy,"https": "https://" + proxy}

|

||||||

"http" : "http://" + str(config['proxy']['proxy']),

|

|

||||||

"https": "https://" + str(config['proxy']['proxy'])

|

|

||||||

}

|

|

||||||

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3100.0 Safari/537.36'}

|

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3100.0 Safari/537.36'}

|

||||||

getweb = requests.get(str(url), headers=headers, proxies=proxies,cookies=cookies)

|

getweb = requests.get(str(url), headers=headers, timeout=timeout,proxies=proxies, cookies=cookies)

|

||||||

getweb.encoding = 'utf-8'

|

getweb.encoding = 'utf-8'

|

||||||

# print(getweb.text)

|

|

||||||

try:

|

|

||||||

return getweb.text

|

return getweb.text

|

||||||

except:

|

|

||||||

print('[-]Connected failed!:Proxy error')

|

|

||||||

else:

|

else:

|

||||||

headers = {

|

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}

|

||||||

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}

|

getweb = requests.get(str(url), headers=headers, timeout=timeout, cookies=cookies)

|

||||||

getweb = requests.get(str(url), headers=headers,cookies=cookies)

|

|

||||||

getweb.encoding = 'utf-8'

|

getweb.encoding = 'utf-8'

|

||||||

try:

|

|

||||||

return getweb.text

|

return getweb.text

|

||||||

except:

|

except requests.exceptions.RequestException:

|

||||||

print("[-]Connect Failed.")

|

i += 1

|

||||||

|

print('[-]Connect retry '+str(i)+'/'+str(retry_count))

|

||||||

|

except requests.exceptions.ConnectionError:

|

||||||

|

i += 1

|

||||||

|

print('[-]Connect retry '+str(i)+'/'+str(retry_count))

|

||||||

|

except requests.exceptions.ProxyError:

|

||||||

|

i += 1

|

||||||

|

print('[-]Connect retry '+str(i)+'/'+str(retry_count))

|

||||||

|

except requests.exceptions.ConnectTimeout:

|

||||||

|

i += 1

|

||||||

|

print('[-]Connect retry '+str(i)+'/'+str(retry_count))

|

||||||

|

print('[-]Connect Failed! Please check your Proxy or Network!')

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

153

AV_Data_Capture.py

Normal file → Executable file

153

AV_Data_Capture.py

Normal file → Executable file

@@ -1,3 +1,6 @@

|

|||||||

|

#!/usr/bin/env python3

|

||||||

|

# -*- coding: utf-8 -*-

|

||||||

|

|

||||||

import glob

|

import glob

|

||||||

import os

|

import os

|

||||||

import time

|

import time

|

||||||

@@ -5,11 +8,24 @@ import re

|

|||||||

import sys

|

import sys

|

||||||

from ADC_function import *

|

from ADC_function import *

|

||||||

import json

|

import json

|

||||||

|

import shutil

|

||||||

|

from configparser import ConfigParser

|

||||||

|

os.chdir(os.getcwd())

|

||||||

|

|

||||||

version='0.10.6'

|

# ============global var===========

|

||||||

|

|

||||||

|

version='1.1'

|

||||||

|

|

||||||

|

config = ConfigParser()

|

||||||

|

config.read(config_file, encoding='UTF-8')

|

||||||

|

|

||||||

|

Platform = sys.platform

|

||||||

|

|

||||||

|

# ==========global var end=========

|

||||||

|

|

||||||

def UpdateCheck():

|

def UpdateCheck():

|

||||||

html2 = get_html('https://raw.githubusercontent.com/wenead99/AV_Data_Capture/master/update_check.json')

|

if UpdateCheckSwitch() == '1':

|

||||||

|

html2 = get_html('https://raw.githubusercontent.com/yoshiko2/AV_Data_Capture/master/update_check.json')

|

||||||

html = json.loads(str(html2))

|

html = json.loads(str(html2))

|

||||||

|

|

||||||

if not version == html['version']:

|

if not version == html['version']:

|

||||||

@@ -17,34 +33,51 @@ def UpdateCheck():

|

|||||||

print('[*] * Download *')

|

print('[*] * Download *')

|

||||||

print('[*] ' + html['download'])

|

print('[*] ' + html['download'])

|

||||||

print('[*]=====================================')

|

print('[*]=====================================')

|

||||||

|

else:

|

||||||

|

print('[+]Update Check disabled!')

|

||||||

def movie_lists():

|

def movie_lists():

|

||||||

#MP4

|

directory = config['directory_capture']['directory']

|

||||||

a2 = glob.glob(os.getcwd() + r"\*.mp4")

|

mp4=[]

|

||||||

# AVI

|

avi=[]

|

||||||

b2 = glob.glob(os.getcwd() + r"\*.avi")

|

rmvb=[]

|

||||||

# RMVB

|

wmv=[]

|

||||||

c2 = glob.glob(os.getcwd() + r"\*.rmvb")

|

mov=[]

|

||||||

# WMV

|

mkv=[]

|

||||||

d2 = glob.glob(os.getcwd() + r"\*.wmv")

|

flv=[]

|

||||||

# MOV

|

ts=[]

|

||||||

e2 = glob.glob(os.getcwd() + r"\*.mov")

|

if directory=='*':

|

||||||

# MKV

|

for i in os.listdir(os.getcwd()):

|

||||||

f2 = glob.glob(os.getcwd() + r"\*.mkv")

|

mp4 += glob.glob(r"./" + i + "/*.mp4")

|

||||||

# FLV

|

avi += glob.glob(r"./" + i + "/*.avi")

|

||||||

g2 = glob.glob(os.getcwd() + r"\*.flv")

|

rmvb += glob.glob(r"./" + i + "/*.rmvb")

|

||||||

# TS

|

wmv += glob.glob(r"./" + i + "/*.wmv")

|

||||||

h2 = glob.glob(os.getcwd() + r"\*.ts")

|

mov += glob.glob(r"./" + i + "/*.mov")

|

||||||

|

mkv += glob.glob(r"./" + i + "/*.mkv")

|

||||||

total = a2+b2+c2+d2+e2+f2+g2+h2

|

flv += glob.glob(r"./" + i + "/*.flv")

|

||||||

|

ts += glob.glob(r"./" + i + "/*.ts")

|

||||||

|

total = mp4 + avi + rmvb + wmv + mov + mkv + flv + ts

|

||||||

return total

|

return total

|

||||||

|

mp4 = glob.glob(r"./" + directory + "/*.mp4")

|

||||||

|

avi = glob.glob(r"./" + directory + "/*.avi")

|

||||||

|

rmvb = glob.glob(r"./" + directory + "/*.rmvb")

|

||||||

|

wmv = glob.glob(r"./" + directory + "/*.wmv")

|

||||||

|

mov = glob.glob(r"./" + directory + "/*.mov")

|

||||||

|

mkv = glob.glob(r"./" + directory + "/*.mkv")

|

||||||

|

flv = glob.glob(r"./" + directory + "/*.flv")

|

||||||

|

ts = glob.glob(r"./" + directory + "/*.ts")

|

||||||

|

total = mp4 + avi + rmvb + wmv + mov + mkv + flv + ts

|

||||||

|

return total

|

||||||

|

def CreatFailedFolder():

|

||||||

|

if not os.path.exists('failed/'): # 新建failed文件夹

|

||||||

|

try:

|

||||||

|

os.makedirs('failed/')

|

||||||

|

except:

|

||||||

|

print("[-]failed!can not be make folder 'failed'\n[-](Please run as Administrator)")

|

||||||

|

os._exit(0)

|

||||||

def lists_from_test(custom_nuber): #电影列表

|

def lists_from_test(custom_nuber): #电影列表

|

||||||

|

|

||||||

a=[]

|

a=[]

|

||||||

a.append(custom_nuber)

|

a.append(custom_nuber)

|

||||||

return a

|

return a

|

||||||

|

|

||||||

def CEF(path):

|

def CEF(path):

|

||||||

files = os.listdir(path) # 获取路径下的子文件(夹)列表

|

files = os.listdir(path) # 获取路径下的子文件(夹)列表

|

||||||

for file in files:

|

for file in files:

|

||||||

@@ -53,29 +86,81 @@ def CEF(path):

|

|||||||

print('[+]Deleting empty folder',path + '/' + file)

|

print('[+]Deleting empty folder',path + '/' + file)

|

||||||

except:

|

except:

|

||||||

a=''

|

a=''

|

||||||

|

|

||||||

def rreplace(self, old, new, *max):

|

def rreplace(self, old, new, *max):

|

||||||

#从右开始替换文件名中内容,源字符串,将被替换的子字符串, 新字符串,用于替换old子字符串,可选字符串, 替换不超过 max 次

|

#从右开始替换文件名中内容,源字符串,将被替换的子字符串, 新字符串,用于替换old子字符串,可选字符串, 替换不超过 max 次

|

||||||

count = len(self)

|

count = len(self)

|

||||||

if max and str(max[0]).isdigit():

|

if max and str(max[0]).isdigit():

|

||||||

count = max[0]

|

count = max[0]

|

||||||

return new.join(self.rsplit(old, count))

|

return new.join(self.rsplit(old, count))

|

||||||

|

def getNumber(filepath):

|

||||||

|

try: # 普通提取番号 主要处理包含减号-的番号

|

||||||

|

try:

|

||||||

|

filepath1 = filepath.replace("_", "-")

|

||||||

|

filepath1.strip('22-sht.me').strip('-HD').strip('-hd')

|

||||||

|

filename = str(re.sub("\[\d{4}-\d{1,2}-\d{1,2}\] - ", "", filepath1)) # 去除文件名中时间

|

||||||

|

file_number = re.search('\w+-\d+', filename).group()

|

||||||

|

return file_number

|

||||||

|

except:

|

||||||

|

filepath1 = filepath.replace("_", "-")

|

||||||

|

filepath1.strip('22-sht.me').strip('-HD').strip('-hd')

|

||||||

|

filename = str(re.sub("\[\d{4}-\d{1,2}-\d{1,2}\] - ", "", filepath1)) # 去除文件名中时间

|

||||||

|

file_number = re.search('\w+-\w+', filename).group()

|

||||||

|

return file_number

|

||||||

|

except: # 提取不含减号-的番号

|

||||||

|

try:

|

||||||

|

filename1 = str(re.sub("ts6\d", "", filepath)).strip('Tokyo-hot').strip('tokyo-hot')

|

||||||

|

filename0 = str(re.sub(".*?\.com-\d+", "", filename1)).strip('_')

|

||||||

|

file_number = str(re.search('\w+\d{4}', filename0).group(0))

|

||||||

|

return file_number

|

||||||

|

except: # 提取无减号番号

|

||||||

|

filename1 = str(re.sub("ts6\d", "", filepath)) # 去除ts64/265

|

||||||

|

filename0 = str(re.sub(".*?\.com-\d+", "", filename1))

|

||||||

|

file_number2 = str(re.match('\w+', filename0).group())

|

||||||

|

file_number = str(file_number2.replace(re.match("^[A-Za-z]+", file_number2).group(),re.match("^[A-Za-z]+", file_number2).group() + '-'))

|

||||||

|

return file_number

|

||||||

|

|

||||||

|

def RunCore():

|

||||||

|

if Platform == 'win32':

|

||||||

|

if os.path.exists('core.py'):

|

||||||

|

os.system('python core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)

|

||||||

|

elif os.path.exists('core.exe'):

|

||||||

|

os.system('core.exe' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从exe启动(用于EXE版程序)

|

||||||

|

elif os.path.exists('core.py') and os.path.exists('core.exe'):

|

||||||

|

os.system('python core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)

|

||||||

|

else:

|

||||||

|

if os.path.exists('core.py'):

|

||||||

|

os.system('python3 core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)

|

||||||

|

elif os.path.exists('core.exe'):

|

||||||

|

os.system('core.exe' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从exe启动(用于EXE版程序)

|

||||||

|

elif os.path.exists('core.py') and os.path.exists('core.exe'):

|

||||||

|

os.system('python3 core.py' + ' "' + i + '" --number "' + getNumber(i) + '"') # 从py文件启动(用于源码py)

|

||||||

|

|

||||||

if __name__ =='__main__':

|

if __name__ =='__main__':

|

||||||

print('[*]===========AV Data Capture===========')

|

print('[*]===========AV Data Capture===========')

|

||||||

print('[*] Version '+version)

|

print('[*] Version '+version)

|

||||||

print('[*]=====================================')

|

print('[*]=====================================')

|

||||||

|

CreatFailedFolder()

|

||||||

UpdateCheck()

|

UpdateCheck()

|

||||||

os.chdir(os.getcwd())

|

os.chdir(os.getcwd())

|

||||||

for i in movie_lists(): #遍历电影列表 交给core处理

|

|

||||||

if '_' in i:

|

|

||||||

os.rename(re.search(r'[^\\/:*?"<>|\r\n]+$', i).group(), rreplace(re.search(r'[^\\/:*?"<>|\r\n]+$', i).group(), '_', '-', 1))

|

|

||||||

i = rreplace(re.search(r'[^\\/:*?"<>|\r\n]+$', i).group(), '_', '-', 1)

|

|

||||||

os.system('python core.py' + ' "' + i + '"') #选择从py文件启动 (用于源码py)

|

|

||||||

#os.system('core.exe' + ' "' + i + '"') #选择从exe文件启动(用于EXE版程序)

|

|

||||||

print("[*]=====================================")

|

|

||||||

|

|

||||||

print("[!]Cleaning empty folders")

|

count = 0

|

||||||

|

count_all = str(len(movie_lists()))

|

||||||

|

print('[+]Find',str(len(movie_lists())),'movies')

|

||||||

|

for i in movie_lists(): #遍历电影列表 交给core处理

|

||||||

|

count = count + 1

|

||||||

|

percentage = str(count/int(count_all)*100)[:4]+'%'

|

||||||

|

print('[!] - '+percentage+' ['+str(count)+'/'+count_all+'] -')

|

||||||

|

try:

|

||||||

|

print("[!]Making Data for [" + i + "], the number is [" + getNumber(i) + "]")

|

||||||

|

RunCore()

|

||||||

|

print("[*]=====================================")

|

||||||

|

except: # 番号提取异常

|

||||||

|

print('[-]' + i + ' Cannot catch the number :')

|

||||||

|

print('[-]Move ' + i + ' to failed folder')

|

||||||

|

shutil.move(i, str(os.getcwd()) + '/' + 'failed/')

|

||||||

|

continue

|

||||||

|

|

||||||

|

|

||||||

CEF('JAV_output')

|

CEF('JAV_output')

|

||||||

print("[+]All finished!!!")

|

print("[+]All finished!!!")

|

||||||

input("[+][+]Press enter key exit, you can check the error messge before you exit.\n[+][+]按回车键结束,你可以在结束之前查看错误信息。")

|

input("[+][+]Press enter key exit, you can check the error messge before you exit.\n[+][+]按回车键结束,你可以在结束之前查看和错误信息。")

|

||||||

268

README.md

268

README.md

@@ -1,74 +1,69 @@

|

|||||||

# AV Data Capture 日本AV元数据刮削器

|

# AV Data Capture

|

||||||

|

|

||||||

|

|

||||||

<a title="Hits" target="_blank" href="https://github.com/b3log/hits"><img src="https://hits.b3log.org/b3log/hits.svg"></a>

|

<a title="Hits" target="_blank" href="https://github.com/yoshiko2/AV_Data_Capture"><img src="https://hits.b3log.org/yoshiko2/AV_Data_Capture.svg"></a>

|

||||||

|

|

||||||

<br>

|

<br>

|

||||||

|

|

||||||

|

|

||||||

|

<br>

|

||||||

<br>

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**日本电影元数据 抓取工具 | 刮削器**,配合本地影片管理软件EMBY,KODI管理本地影片,该软件起到分类与元数据抓取作用,利用元数据信息来分类,供本地影片分类整理使用。

|

||||||

|

|

||||||

# 目录

|

# 目录

|

||||||

* [前言](#前言)

|

|

||||||

* [捐助二维码](#捐助二维码)

|

|

||||||

* [效果图](#效果图)

|

|

||||||

* [免责声明](#免责声明)

|

* [免责声明](#免责声明)

|

||||||

|

* [注意](#注意)

|

||||||

|

* [你问我答 FAQ](#你问我答-faq)

|

||||||

|

* [效果图](#效果图)

|

||||||

* [如何使用](#如何使用)

|

* [如何使用](#如何使用)

|

||||||

* [下载](#下载)

|

* [下载](#下载)

|

||||||

* [简明教程](#简要教程)

|

* [简明教程](#简要教程)

|

||||||

* [模块安装](#1请安装模块在cmd终端逐条输入以下命令安装)

|

* [模块安装](#1请安装模块在cmd终端逐条输入以下命令安装)

|

||||||

* [配置](#2配置proxyini)

|

* [配置](#2配置configini)

|

||||||

* [运行软件](#4运行-av_data_capturepyexe)

|

* [(可选)设置自定义目录和影片重命名规则](#3可选设置自定义目录和影片重命名规则)

|

||||||

* [异常处理(重要)](#5异常处理重要)

|

* [运行软件](#5运行-av_data_capturepyexe)

|

||||||

* [导入至EMBY](#7把jav_output文件夹导入到embykodi中根据封面选片子享受手冲乐趣)

|

* [影片原路径处理](#4建议把软件拷贝和电影的统一目录下)

|

||||||

* [输出文件示例](#8输出的文件如下)

|

* [异常处理(重要)](#51异常处理重要)

|

||||||

|

* [导入至媒体库](#7把jav_output文件夹导入到embykodi中等待元数据刷新完成)

|

||||||

|

* [关于群晖NAS](#8关于群晖NAS)

|

||||||

* [写在后面](#9写在后面)

|

* [写在后面](#9写在后面)

|

||||||

* [软件流程图](#10软件流程图)

|

|

||||||

* []()

|

|

||||||

# 前言

|

|

||||||

目前,我下的AV越来越多,也意味着AV要**集中地管理**,形成本地媒体库。现在有两款主流的AV元数据获取器,"EverAver"和"Javhelper"。前者的优点是元数据获取比较全,缺点是不能批量处理;后者优点是可以批量处理,但是元数据不够全。<br>

|

|

||||||

为此,综合上述软件特点,我写出了本软件,为了方便的管理本地AV,和更好的手冲体验。<br>

|

|

||||||

希望大家可以认真耐心地看完本文档,你的耐心换来的是完美的管理方式。<br>

|

|

||||||

本软件更新可能比较**频繁**,麻烦诸位用户**积极更新新版本**以获得**最佳体验**。

|

|

||||||

|

|

||||||

**可以结合pockies大神的[ 打造本地AV(毛片)媒体库 ](https://pockies.github.io/2019/03/25/everaver-emby-kodi/)看本文档**<br>

|

|

||||||

**tg官方电报群:[ 点击进群](https://t.me/AV_Data_Capture_Official)**<br>

|

|

||||||

**推荐用法: 使用该软件后,对于不能正常获取元数据的电影可以用[ Everaver ](http://everaver.blogspot.com/)来补救**<br>

|

|

||||||

暂不支持多P电影<br>

|

|

||||||

[回到目录](#目录)

|

|

||||||

|

|

||||||

|

|

||||||

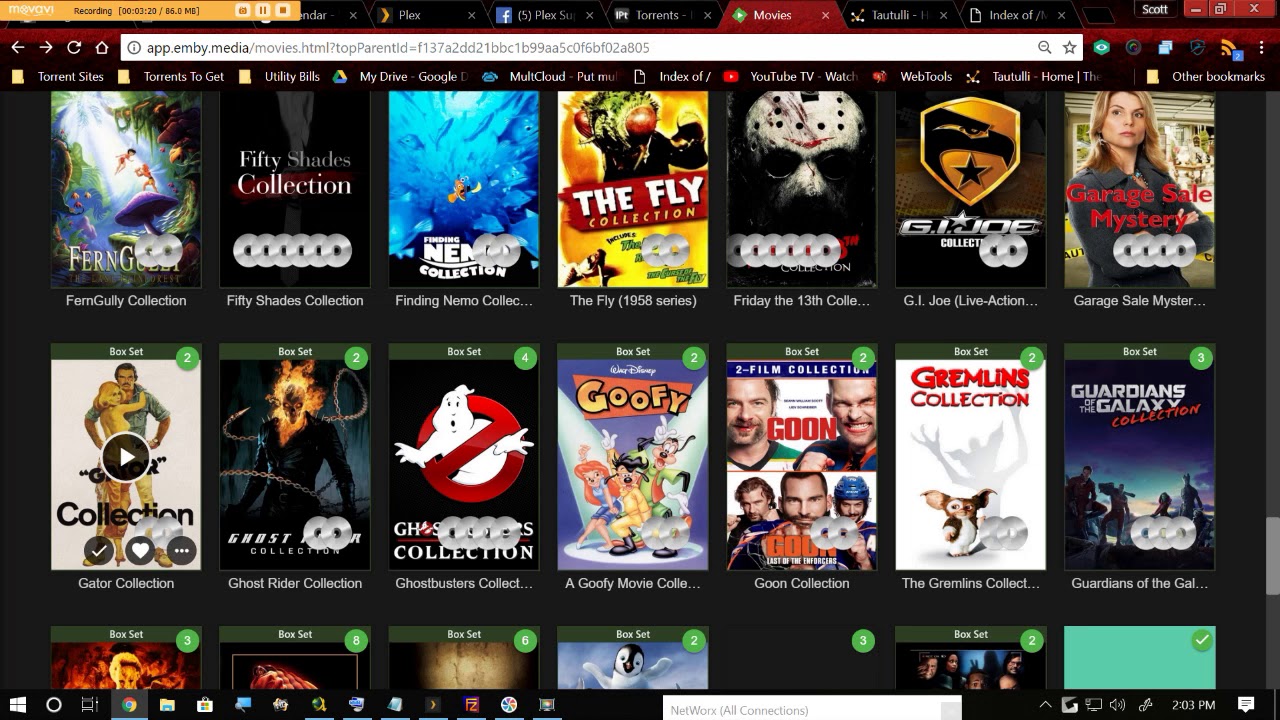

# 效果图

|

|

||||||

**由于法律因素,图片必须经马赛克处理**<br>

|

|

||||||

|

|

||||||

<br>

|

|

||||||

[回到目录](#目录)

|

|

||||||

|

|

||||||

# 捐助二维码

|

|

||||||

如果你觉得本软件好用,可以考虑捐助作者,多少钱无所谓,不强求,你的支持就是我的动力,非常感谢您的捐助

|

|

||||||

<br>

|

|

||||||

[回到目录](#目录)

|

|

||||||

|

|

||||||

# 免责声明

|

# 免责声明

|

||||||

1.本软件仅供技术交流,学术交流使用<br>

|

1.本软件仅供**技术交流,学术交流**使用,本项目旨在学习 Python3<br>

|

||||||

2.本软件不提供任何有关淫秽色情的影视下载方式<br>

|

2.本软件禁止用于任何非法用途<br>

|

||||||

3.使用者使用该软件产生的一切法律后果由使用者承担<br>

|

3.使用者使用该软件产生的一切法律后果由使用者承担<br>

|

||||||

4.该软件禁止任何商用行为<br>

|

4.不可使用于商业和个人其他意图<br>

|

||||||

[回到目录](#目录)

|

|

||||||

|

# 注意

|

||||||

|

**推荐用法: 使用该软件后,对于不能正常获取元数据的电影可以用 Everaver 来补救**<br>

|

||||||

|

暂不支持多P电影<br>

|

||||||

|

|

||||||

|

# 你问我答 FAQ

|

||||||

|

### F:这软件能下片吗?

|

||||||

|

**Q**:该软件不提供任何影片下载地址,仅供本地影片分类整理使用。

|

||||||

|

### F:什么是元数据?

|

||||||

|

**Q**:元数据包括了影片的:封面,导演,演员,简介,类型......

|

||||||

|

### F:软件收费吗?

|

||||||

|

**Q**:软件永久免费。除了 **作者** 钦点以外,给那些 **利用本软件牟利** 的人送上 **骨灰盒-全家族 | 崭新出厂**

|

||||||

|

### F:软件运行异常怎么办?

|

||||||

|

**Q**:认真看 [异常处理(重要)](#5异常处理重要)

|

||||||

|

|

||||||

|

# 效果图

|

||||||

|

**图片来自网络**,由于相关法律法规,具体效果请自行联想

|

||||||

|

|

||||||

|

<br>

|

||||||

|

|

||||||

# 如何使用

|

# 如何使用

|

||||||

### 下载

|

### 下载

|

||||||

* release的程序可脱离python环境运行,可跳过 [模块安装](#1请安装模块在cmd终端逐条输入以下命令安装)<br>下载地址(**仅限Windows**):https://github.com/wenead99/AV_Data_Capture/releases

|

* release的程序可脱离**python环境**运行,可跳过 [模块安装](#1请安装模块在cmd终端逐条输入以下命令安装)<br>Release 下载地址(**仅限Windows**):<br>[](https://github.com/yoshiko2/AV_Data_Capture/releases)<br>

|

||||||

* Linux,MacOS请下载源码包运行

|

* Linux,MacOS请下载源码包运行

|

||||||

|

|

||||||

|

* Windows Python环境:[点击前往](https://www.python.org/downloads/windows/) 选中executable installer下载

|

||||||

|

* MacOS Python环境:[点击前往](https://www.python.org/downloads/mac-osx/)

|

||||||

|

* Linux Python环境:Linux用户懂的吧,不解释下载地址

|

||||||

### 简要教程:<br>

|

### 简要教程:<br>

|

||||||

**1.把软件拉到和电影的同一目录<br>2.设置ini文件的代理(路由器拥有自动代理功能的可以把proxy=后面内容去掉)<br>3.运行软件等待完成<br>4.把JAV_output导入至KODI,EMBY中。<br>详细请看以下教程**<br>

|

**1.把软件拉到和电影的同一目录<br>2.设置ini文件的代理(路由器拥有自动代理功能的可以把proxy=后面内容去掉)<br>3.运行软件等待完成<br>4.把JAV_output导入至KODI,EMBY中。<br>详细请看以下教程**<br>

|

||||||

[回到目录](#目录)

|

|

||||||

|

|

||||||

## 1.请安装模块,在CMD/终端逐条输入以下命令安装

|

## 1.请安装模块,在CMD/终端逐条输入以下命令安装

|

||||||

```python

|

```python

|

||||||

@@ -92,20 +87,96 @@ pip install pillow

|

|||||||

```

|

```

|

||||||

###

|

###

|

||||||

|

|

||||||

|

## 2.配置config.ini

|

||||||

|

config.ini

|

||||||

|

>[common]<br>

|

||||||

|

>main_mode=1<br>

|

||||||

|

>failed_output_folder=failed<br>

|

||||||

|

>success_output_folder=JAV_output<br>

|

||||||

|

>

|

||||||

|

>[proxy]<br>

|

||||||

|

>proxy=127.0.0.1:1080<br>

|

||||||

|

>timeout=10<br>

|

||||||

|

>retry=3<br>

|

||||||

|

>

|

||||||

|

>[Name_Rule]<br>

|

||||||

|

>location_rule=actor+'/'+number<br>

|

||||||

|

>naming_rule=number+'-'+title<br>

|

||||||

|

>

|

||||||

|

>[update]<br>

|

||||||

|

>update_check=1<br>

|

||||||

|

>

|

||||||

|

>[media]<br>

|

||||||

|

>media_warehouse=emby<br>

|

||||||

|

>#emby or plex<br>

|

||||||

|

>

|

||||||

|

>[directory_capture]<br>

|

||||||

|

>directory=<br>

|

||||||

|

|

||||||

[回到目录](#目录)

|

### 全局设置

|

||||||

|

---

|

||||||

|

#### 软件模式

|

||||||

|

>[common]<br>

|

||||||

|

>main_mode=1<br>

|

||||||

|

|

||||||

## 2.配置proxy.ini

|

1为普通模式,2为整理模式:仅根据女优把电影命名为番号并分类到女优名称的文件夹下

|

||||||

#### 1.针对网络审查国家或地区的代理设置

|

|

||||||

打开```proxy.ini```,在```[proxy]```下的```proxy```行设置本地代理地址和端口,支持Shadowsocks/R,V2RAY本地代理端口:<br>

|

>failed_output_folder=failed<br>

|

||||||

|

>success_output_folder=JAV_outputd<br>

|

||||||

|

|

||||||

|

设置成功输出目录和失败输出目录

|

||||||

|

|

||||||

|

---

|

||||||

|

### 网络设置

|

||||||

|

#### * 针对“某些地区”的代理设置

|

||||||

|

打开```config.ini```,在```[proxy]```下的```proxy```行设置本地代理地址和端口,支持Shadowxxxx/X,V2XXX本地代理端口:<br>

|

||||||

例子:```proxy=127.0.0.1:1080```<br>素人系列抓取建议使用日本代理<br>

|

例子:```proxy=127.0.0.1:1080```<br>素人系列抓取建议使用日本代理<br>

|

||||||

**(路由器拥有自动代理功能的可以把proxy=后面内容去掉)**<br>

|

**路由器拥有自动代理功能的可以把proxy=后面内容去掉**<br>

|

||||||

|

**本地代理软件开全局模式的同志同上**<br>

|

||||||

**如果遇到tineout错误,可以把文件的proxy=后面的地址和端口删除,并开启vpn全局模式,或者重启电脑,vpn,网卡**<br>

|

**如果遇到tineout错误,可以把文件的proxy=后面的地址和端口删除,并开启vpn全局模式,或者重启电脑,vpn,网卡**<br>

|

||||||

[回到目录](#目录)

|

#### 连接超时重试设置

|

||||||

|

>[proxy]<br>

|

||||||

|

>timeout=10<br>

|

||||||

|

|

||||||

#### 2.(可选)设置自定义目录和影片重命名规则

|

10为超时重试时间 单位:秒

|

||||||

**已有默认配置**<br>

|

|

||||||

##### 命名参数<br>

|

---

|

||||||

|

#### 连接重试次数设置

|

||||||

|

>[proxy]<br>

|

||||||

|

>retry=3<br>

|

||||||

|

|

||||||

|

3即为重试次数

|

||||||

|

|

||||||

|

---

|

||||||

|

#### 检查更新开关

|

||||||

|

>[update]<br>

|

||||||

|

>update_check=1<br>

|

||||||

|

|

||||||

|

0为关闭,1为开启,不建议关闭

|

||||||

|

|

||||||

|

---

|

||||||

|

##### 媒体库选择

|

||||||

|

>[media]<br>

|

||||||

|

>media_warehouse=emby<br>

|

||||||

|

>#emby or plex<br>

|

||||||

|

|

||||||

|

可选择emby, plex<br>

|

||||||

|

如果是PLEX,请安装插件:```XBMCnfoMoviesImporter```

|

||||||

|

|

||||||

|

---

|

||||||

|

#### 抓取目录选择

|

||||||

|

>[directory_capture]<br>

|

||||||

|

>directory=<br>

|

||||||

|

如果directory后面为空,则抓取和程序同一目录下的影片,设置为``` * ```可抓取软件所在目录下的所有子目录中的影片

|

||||||

|

## 3.(可选)设置自定义目录和影片重命名规则

|

||||||

|

>[Name_Rule]<br>

|

||||||

|

>location_rule=actor+'/'+number<br>

|

||||||

|

>naming_rule=number+'-'+title<br>

|

||||||

|

|

||||||

|

已有默认配置

|

||||||

|

|

||||||

|

---

|

||||||

|

#### 命名参数

|

||||||

>title = 片名<br>

|

>title = 片名<br>

|

||||||

>actor = 演员<br>

|

>actor = 演员<br>

|

||||||

>studio = 公司<br>

|

>studio = 公司<br>

|

||||||

@@ -117,24 +188,49 @@ pip install pillow

|

|||||||

>tag = 类型<br>

|

>tag = 类型<br>

|

||||||

>outline = 简介<br>

|

>outline = 简介<br>

|

||||||

>runtime = 时长<br>

|

>runtime = 时长<br>

|

||||||

##### **例子**:<br>

|

|

||||||

>目录结构规则:location_rule='JAV_output/'+actor+'/'+number **不推荐修改目录结构规则,抓取数据时新建文件夹容易出错**<br>

|

上面的参数以下都称之为**变量**

|

||||||

>影片命名规则:naming_rule='['+number+']-'+title<br> **在EMBY,KODI等本地媒体库显示的标题**

|

|

||||||

[回到目录](#目录)

|

#### 例子:

|

||||||

## 3.把软件拷贝和AV的统一目录下

|

自定义规则方法:有两种元素,变量和字符,无论是任何一种元素之间连接必须要用加号 **+** ,比如:```'naming_rule=['+number+']-'+title```,其中冒号 ' ' 内的文字是字符,没有冒号包含的文字是变量,元素之间连接必须要用加号 **+** <br>

|

||||||

## 4.运行 ```AV_Data_capture.py/.exe```

|

目录结构规则:默认 ```location_rule=actor+'/'+number```<br> **不推荐修改时在这里添加title**,有时title过长,因为Windows API问题,抓取数据时新建文件夹容易出错。<br>

|

||||||

你也可以把单个影片拖动到core程序<br>

|

影片命名规则:默认 ```naming_rule=number+'-'+title```<br> **在EMBY,KODI等本地媒体库显示的标题,不影响目录结构下影片文件的命名**,依旧是 番号+后缀。

|

||||||

<br>

|

|

||||||

[回到目录](#目录)

|

---

|

||||||

## 5.异常处理(重要)

|

### 更新开关

|

||||||

|

>[update]<br>update_check=1<br>

|

||||||

|

1为开,0为关

|

||||||

|

## 4.建议把软件拷贝和电影的统一目录下

|

||||||

|

如果```config.ini```中```directory=```后面为空的情况下

|

||||||

|

## 5.运行 ```AV_Data_capture.py/.exe```

|

||||||

|

当文件名包含:<br>

|

||||||

|

中文,字幕,-c., -C., 处理元数据时会加上**中文字幕**标签

|

||||||

|

## 5.1 异常处理(重要)

|

||||||

|

### 请确保软件是完整地!确保ini文件内容是和下载提供ini文件内容的一致的!

|

||||||

|

---

|

||||||

|

### 关于软件打开就闪退

|

||||||

|

可以打开cmd命令提示符,把 ```AV_Data_capture.py/.exe```拖进cmd窗口回车运行,查看错误,出现的错误信息**依据以下条目解决**

|

||||||

|

|

||||||

|

---

|

||||||

|

### 关于 ```Updata_check``` 和 ```JSON``` 相关的错误

|

||||||

|

跳转 [网络设置](#网络设置)

|

||||||

|

|

||||||

|

---

|

||||||

|

### 关于```FileNotFoundError: [WinError 3] 系统找不到指定的路径。: 'JAV_output''```

|

||||||

|

在软件所在文件夹下新建 JAV_output 文件夹,可能是你没有把软件拉到和电影的同一目录

|

||||||

|

|

||||||

|

---

|

||||||

### 关于连接拒绝的错误

|

### 关于连接拒绝的错误

|

||||||

请设置好[代理](#1针对网络审查国家或地区的代理设置)<br>

|

请设置好[代理](#针对某些地区的代理设置)<br>

|

||||||

|

|

||||||

|

---

|

||||||

### 关于Nonetype,xpath报错

|

### 关于Nonetype,xpath报错

|

||||||

同上<br>

|

同上<br>

|

||||||

[回到目录](#目录)

|

|

||||||

|

---

|

||||||

### 关于番号提取失败或者异常

|

### 关于番号提取失败或者异常

|

||||||

**目前可以提取元素的影片:JAVBUS上有元数据的电影,素人系列:300Maan,259luxu,siro等,FC2系列**<br>

|

**目前可以提取元素的影片:JAVBUS上有元数据的电影,素人系列:300Maan,259luxu,siro等,FC2系列**<br>

|

||||||

>下一张图片来自Pockies的blog:https://pockies.github.io/2019/03/25/everaver-emby-kodi/ 原作者已授权<br>

|

>下一张图片来自Pockies的blog 原作者已授权<br>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

@@ -144,26 +240,22 @@ pip install pillow

|

|||||||

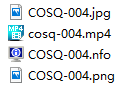

COSQ-004.mp4

|

COSQ-004.mp4

|

||||||

```

|

```

|

||||||

|

|

||||||

文件名中间要有下划线或者减号"_","-",没有多余的内容只有番号为最佳,可以让软件更好获取元数据

|

针对 **野鸡番号** ,你需要把文件名命名为与抓取网站提供的番号一致(文件拓展名除外),然后把文件拖拽至core.exe/.py<br>

|

||||||

|

**野鸡番号**:比如 ```XXX-XXX-1```, ```1301XX-MINA_YUKA``` 这种**野鸡**番号,在javbus等资料库存在的作品。<br>**重要**:除了 **影片文件名** ```XXXX-XXX-C```,后面这种-C的是指电影有中文字幕!<br>

|

||||||

|

条件:文件名中间要有下划线或者减号"_","-",没有多余的内容只有番号为最佳,可以让软件更好获取元数据

|

||||||

对于多影片重命名,可以用[ReNamer](http://www.den4b.com/products/renamer)来批量重命名<br>

|

对于多影片重命名,可以用[ReNamer](http://www.den4b.com/products/renamer)来批量重命名<br>

|

||||||

[回到目录](#目录)

|

|

||||||

|

---

|

||||||

|

### 关于PIL/image.py

|

||||||

|

暂时无解,可能是网络问题或者pillow模块打包问题,你可以用源码运行(要安装好第一步的模块)

|

||||||

|

|

||||||

|

|

||||||

## 6.软件会自动把元数据获取成功的电影移动到JAV_output文件夹中,根据女优分类,失败的电影移动到failed文件夹中。

|

## 6.软件会自动把元数据获取成功的电影移动到JAV_output文件夹中,根据演员分类,失败的电影移动到failed文件夹中。

|

||||||

## 7.把JAV_output文件夹导入到EMBY,KODI中,根据封面选片子,享受手冲乐趣

|

## 7.把JAV_output文件夹导入到EMBY,KODI中,等待元数据刷新,完成

|

||||||

cookies大神的EMBY教程:[链接](https://pockies.github.io/2019/03/25/everaver-emby-kodi/#%E5%AE%89%E8%A3%85emby%E5%B9%B6%E6%B7%BB%E5%8A%A0%E5%AA%92%E4%BD%93%E5%BA%93)<br>

|

## 8.关于群晖NAS

|

||||||

[回到目录](#目录)

|

开启SMB在Windows上挂载为网络磁盘即可使用本软件,也适用于其他NAS

|

||||||

## 8.输出的文件如下

|

|

||||||

|

|

||||||

|

|

||||||

<br>

|

|

||||||

[回到目录](#目录)

|

|

||||||

## 9.写在后面

|

## 9.写在后面

|

||||||

怎么样,看着自己的AV被这样完美地管理,是不是感觉成就感爆棚呢?<br>

|

怎么样,看着自己的日本电影被这样完美地管理,是不是感觉成就感爆棚呢?<br>

|

||||||

[回到目录](#目录)

|

**tg官方电报群:[ 点击进群](https://t.me/AV_Data_Capture_Official)**<br>

|

||||||

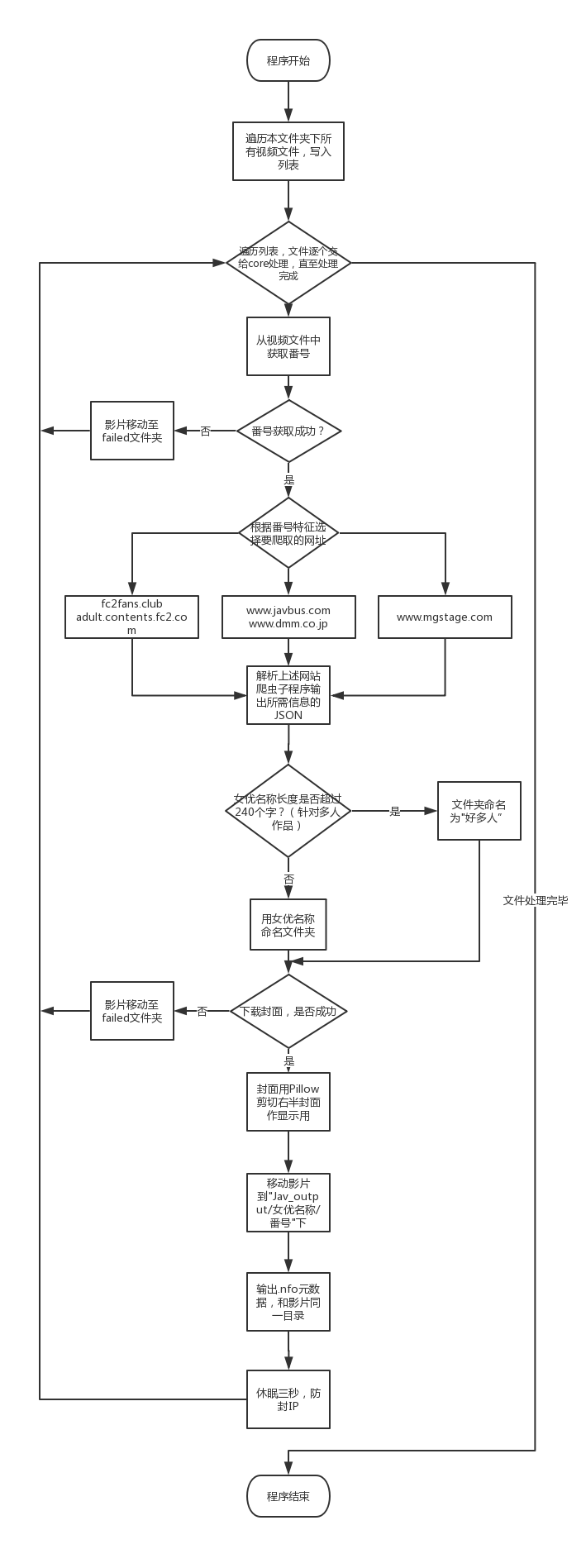

## 10.软件流程图

|

|

||||||

<br>

|

|

||||||

[回到目录](#目录)

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

112

avsox.py

Normal file

112

avsox.py

Normal file

@@ -0,0 +1,112 @@

|

|||||||

|

import re

|

||||||

|

from lxml import etree

|

||||||

|

import json

|

||||||

|

from bs4 import BeautifulSoup

|

||||||

|

from ADC_function import *

|

||||||

|

|

||||||

|

def getActorPhoto(htmlcode): #//*[@id="star_qdt"]/li/a/img

|

||||||

|

soup = BeautifulSoup(htmlcode, 'lxml')

|

||||||

|

a = soup.find_all(attrs={'class': 'avatar-box'})

|

||||||

|

d = {}

|

||||||

|

for i in a:

|

||||||

|

l = i.img['src']

|

||||||

|

t = i.span.get_text()

|

||||||

|

p2 = {t: l}

|

||||||

|

d.update(p2)

|

||||||

|

return d

|

||||||

|

def getTitle(a):

|

||||||

|

try:

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser())

|

||||||

|

result = str(html.xpath('/html/body/div[2]/h3/text()')).strip(" ['']") #[0]

|

||||||

|

return result.replace('/', '')

|

||||||

|

except:

|

||||||

|

return ''

|

||||||

|

def getActor(a): #//*[@id="center_column"]/div[2]/div[1]/div/table/tbody/tr[1]/td/text()

|

||||||

|

soup = BeautifulSoup(a, 'lxml')

|

||||||

|

a = soup.find_all(attrs={'class': 'avatar-box'})

|

||||||

|

d = []

|

||||||

|

for i in a:

|

||||||

|

d.append(i.span.get_text())

|

||||||

|

return d

|

||||||

|

def getStudio(a):

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//p[contains(text(),"制作商: ")]/following-sibling::p[1]/a/text()')).strip(" ['']").replace("', '",' ')

|

||||||

|

return result1

|

||||||

|

def getRuntime(a):

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//span[contains(text(),"长度:")]/../text()')).strip(" ['分钟']")

|

||||||

|

return result1

|

||||||

|

def getLabel(a):

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//p[contains(text(),"系列:")]/following-sibling::p[1]/a/text()')).strip(" ['']")

|

||||||

|

return result1

|

||||||

|

def getNum(a):

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//span[contains(text(),"识别码:")]/../span[2]/text()')).strip(" ['']")

|

||||||

|

return result1

|

||||||

|

def getYear(release):

|

||||||

|

try:

|

||||||

|

result = str(re.search('\d{4}',release).group())

|

||||||

|

return result

|

||||||

|

except:

|

||||||

|

return release

|

||||||

|

def getRelease(a):

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//span[contains(text(),"发行时间:")]/../text()')).strip(" ['']")

|

||||||

|

return result1

|

||||||

|

def getCover(htmlcode):

|

||||||

|

html = etree.fromstring(htmlcode, etree.HTMLParser())

|

||||||

|

result = str(html.xpath('/html/body/div[2]/div[1]/div[1]/a/img/@src')).strip(" ['']")

|

||||||

|

return result

|

||||||

|

def getCover_small(htmlcode):

|

||||||

|

html = etree.fromstring(htmlcode, etree.HTMLParser())

|

||||||

|

result = str(html.xpath('//*[@id="waterfall"]/div/a/div[1]/img/@src')).strip(" ['']")

|

||||||

|

return result

|

||||||

|

def getTag(a): # 获取演员

|

||||||

|

soup = BeautifulSoup(a, 'lxml')

|

||||||

|

a = soup.find_all(attrs={'class': 'genre'})

|

||||||

|

d = []

|

||||||

|

for i in a:

|

||||||

|

d.append(i.get_text())

|

||||||

|

return d

|

||||||

|

|

||||||

|

def main(number):

|

||||||

|

a = get_html('https://avsox.asia/cn/search/' + number)

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//*[@id="waterfall"]/div/a/@href')).strip(" ['']")

|

||||||

|

if result1 == '' or result1 == 'null' or result1 == 'None':

|

||||||

|

a = get_html('https://avsox.asia/cn/search/' + number.replace('-', '_'))

|

||||||

|

print(a)

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//*[@id="waterfall"]/div/a/@href')).strip(" ['']")

|

||||||

|

if result1 == '' or result1 == 'null' or result1 == 'None':

|

||||||

|

a = get_html('https://avsox.asia/cn/search/' + number.replace('_', ''))

|

||||||

|

print(a)

|

||||||

|

html = etree.fromstring(a, etree.HTMLParser()) # //table/tr[1]/td[1]/text()

|

||||||

|

result1 = str(html.xpath('//*[@id="waterfall"]/div/a/@href')).strip(" ['']")

|

||||||

|

web = get_html(result1)

|

||||||

|

soup = BeautifulSoup(web, 'lxml')

|

||||||

|

info = str(soup.find(attrs={'class': 'row movie'}))

|

||||||

|

dic = {

|

||||||

|

'actor': getActor(web),

|

||||||

|

'title': getTitle(web).strip(getNum(web)),

|

||||||

|

'studio': getStudio(info),

|

||||||

|

'outline': '',#

|

||||||

|

'runtime': getRuntime(info),

|

||||||

|

'director': '', #

|

||||||

|

'release': getRelease(info),

|

||||||

|

'number': getNum(info),

|

||||||

|

'cover': getCover(web),

|

||||||

|

'cover_small': getCover_small(a),

|

||||||

|

'imagecut': 3,

|

||||||

|

'tag': getTag(web),

|

||||||

|

'label': getLabel(info),

|

||||||

|

'year': getYear(getRelease(info)), # str(re.search('\d{4}',getRelease(a)).group()),

|

||||||

|

'actor_photo': getActorPhoto(web),

|

||||||

|

'website': result1,

|

||||||

|

'source': 'avsox.py',

|

||||||

|

}

|

||||||

|

js = json.dumps(dic, ensure_ascii=False, sort_keys=True, indent=4, separators=(',', ':'), ) # .encode('UTF-8')

|

||||||

|

return js

|

||||||

|

|

||||||

|

#print(main('041516_541'))

|

||||||

23

config.ini

Normal file

23

config.ini

Normal file

@@ -0,0 +1,23 @@

|

|||||||

|

[common]

|

||||||

|

main_mode=1

|

||||||

|

failed_output_folder=failed

|

||||||

|

success_output_folder=JAV_output

|

||||||

|

|

||||||

|

[proxy]

|

||||||

|

proxy=127.0.0.1:1080

|

||||||

|

timeout=10

|

||||||

|

retry=3

|

||||||

|

|

||||||

|

[Name_Rule]

|

||||||

|

location_rule=actor+'/'+number

|

||||||

|

naming_rule=number+'-'+title

|

||||||

|

|

||||||

|

[update]

|

||||||

|

update_check=1

|

||||||

|

|

||||||

|

[media]

|

||||||

|

media_warehouse=emby

|

||||||

|

#emby or plex

|

||||||

|

|

||||||

|

[directory_capture]

|

||||||

|

directory=

|

||||||

435

core.py

Normal file → Executable file

435

core.py

Normal file → Executable file

@@ -1,15 +1,29 @@

|

|||||||

|

# -*- coding: utf-8 -*-

|

||||||

|

|

||||||

import re

|

import re

|

||||||

import os

|

import os

|

||||||

import os.path

|

import os.path

|

||||||

import shutil

|

import shutil

|

||||||

from PIL import Image

|

from PIL import Image

|

||||||

import time

|

import time

|

||||||

import javbus

|

|

||||||

import json

|

import json

|

||||||

import fc2fans_club

|

|

||||||

import siro

|

|

||||||

from ADC_function import *

|

from ADC_function import *

|

||||||

from configparser import ConfigParser

|

from configparser import ConfigParser

|

||||||

|

import argparse

|

||||||

|

#=========website========

|

||||||

|

import fc2fans_club

|

||||||

|

import siro

|

||||||

|

import avsox

|

||||||

|

import javbus

|

||||||

|

import javdb

|

||||||

|

#=========website========

|

||||||

|

|

||||||

|

Config = ConfigParser()

|

||||||

|

Config.read(config_file, encoding='UTF-8')

|

||||||

|

try:

|

||||||

|

option = ReadMediaWarehouse()

|

||||||

|

except:

|

||||||

|

print('[-]Config media_warehouse read failed!')

|

||||||

|

|

||||||

#初始化全局变量

|

#初始化全局变量

|

||||||

title=''

|

title=''

|

||||||

@@ -25,23 +39,43 @@ number=''

|

|||||||

cover=''

|

cover=''

|

||||||

imagecut=''

|

imagecut=''

|

||||||

tag=[]

|

tag=[]

|

||||||

|

cn_sub=''

|

||||||

|

path=''

|

||||||

|

houzhui=''

|

||||||

|

website=''

|

||||||

|

json_data={}

|

||||||

|

actor_photo={}

|

||||||

|

cover_small=''

|

||||||

naming_rule =''#eval(config['Name_Rule']['naming_rule'])

|

naming_rule =''#eval(config['Name_Rule']['naming_rule'])

|

||||||

location_rule=''#eval(config['Name_Rule']['location_rule'])

|

location_rule=''#eval(config['Name_Rule']['location_rule'])

|

||||||

|

program_mode = Config['common']['main_mode']

|

||||||

|

failed_folder= Config['common']['failed_output_folder']

|

||||||

|

success_folder=Config['common']['success_output_folder']

|

||||||

#=====================本地文件处理===========================

|

#=====================本地文件处理===========================

|

||||||

|

def moveFailedFolder():

|

||||||

|

global filepath

|

||||||

|

print('[-]Move to Failed output folder')

|

||||||

|

shutil.move(filepath, str(os.getcwd()) + '/' + failed_folder + '/')

|

||||||

|

os._exit(0)

|

||||||

def argparse_get_file():

|

def argparse_get_file():

|

||||||

import argparse

|

|

||||||

parser = argparse.ArgumentParser()

|

parser = argparse.ArgumentParser()

|

||||||

|

parser.add_argument("--number", help="Enter Number on here", default='')

|

||||||

parser.add_argument("file", help="Write the file path on here")

|

parser.add_argument("file", help="Write the file path on here")

|

||||||

args = parser.parse_args()

|

args = parser.parse_args()

|

||||||

return args.file

|

return (args.file, args.number)

|

||||||

def CreatFailedFolder():

|

def CreatFailedFolder():

|

||||||

if not os.path.exists('failed/'): # 新建failed文件夹

|

if not os.path.exists(failed_folder+'/'): # 新建failed文件夹

|

||||||

try:

|

try:

|

||||||

os.makedirs('failed/')

|

os.makedirs(failed_folder+'/')

|

||||||

except:

|

except:

|

||||||

print("[-]failed!can not be make folder 'failed'\n[-](Please run as Administrator)")

|

print("[-]failed!can not be make Failed output folder\n[-](Please run as Administrator)")

|

||||||

os._exit(0)

|

os._exit(0)

|

||||||

def getNumberFromFilename(filepath):

|

def getDataState(json_data): #元数据获取失败检测

|

||||||

|

if json_data['title'] == '' or json_data['title'] == 'None' or json_data['title'] == 'null':

|

||||||

|

return 0

|

||||||

|

else:

|

||||||

|

return 1

|

||||||

|

def getDataFromJSON(file_number): #从JSON返回元数据

|

||||||

global title

|

global title

|

||||||

global studio

|

global studio

|

||||||

global year

|

global year

|

||||||

@@ -56,171 +90,203 @@ def getNumberFromFilename(filepath):

|

|||||||

global imagecut

|

global imagecut

|

||||||

global tag

|

global tag

|

||||||

global image_main

|

global image_main

|

||||||

|

global cn_sub

|

||||||

|

global website

|

||||||

|

global actor_photo

|

||||||

|

global cover_small

|

||||||

|

|

||||||

global naming_rule

|

global naming_rule

|

||||||

global location_rule

|

global location_rule

|

||||||

|

|

||||||

#================================================获取文件番号================================================

|

|

||||||

try: #试图提取番号

|

|

||||||

# ====番号获取主程序====

|

|

||||||

try: # 普通提取番号 主要处理包含减号-的番号

|

|

||||||

filepath.strip('22-sht.me').strip('-HD').strip('-hd')

|

|

||||||

filename = str(re.sub("\[\d{4}-\d{1,2}-\d{1,2}\] - ", "", filepath)) # 去除文件名中文件名

|

|

||||||

file_number = re.search('\w+-\d+', filename).group()

|

|

||||||

except: # 提取不含减号-的番号

|

|

||||||

try: # 提取东京热番号格式 n1087

|

|

||||||

filename1 = str(re.sub("h26\d", "", filepath)).strip('Tokyo-hot').strip('tokyo-hot')

|

|

||||||

filename0 = str(re.sub(".*?\.com-\d+", "", filename1)).strip('_')

|

|

||||||

file_number = str(re.search('n\d{4}', filename0).group(0))

|

|

||||||

except: # 提取无减号番号

|

|

||||||

filename1 = str(re.sub("h26\d", "", filepath)) # 去除h264/265

|

|

||||||

filename0 = str(re.sub(".*?\.com-\d+", "", filename1))

|

|

||||||

file_number2 = str(re.match('\w+', filename0).group())

|

|

||||||

file_number = str(file_number2.replace(re.match("^[A-Za-z]+", file_number2).group(),re.match("^[A-Za-z]+", file_number2).group() + '-'))

|

|

||||||

#if not re.search('\w-', file_number).group() == 'None':

|

|

||||||

#file_number = re.search('\w+-\w+', filename).group()

|

|

||||||

#上面是插入减号-到番号中

|

|

||||||

print("[!]Making Data for [" + filename + "],the number is [" + file_number + "]")

|

|

||||||

# ====番号获取主程序=结束===

|

|

||||||

except Exception as e: #番号提取异常

|

|

||||||

print('[-]'+str(os.path.basename(filepath))+' Cannot catch the number :')

|

|

||||||

print('[-]' + str(os.path.basename(filepath)) + ' :', e)

|

|

||||||

print('[-]Move ' + os.path.basename(filepath) + ' to failed folder')

|

|

||||||

shutil.move(filepath, str(os.getcwd()) + '/' + 'failed/')

|

|

||||||

os._exit(0)

|

|

||||||

except IOError as e2:

|

|

||||||

print('[-]' + str(os.path.basename(filepath)) + ' Cannot catch the number :')

|

|

||||||

print('[-]' + str(os.path.basename(filepath)) + ' :',e2)

|

|

||||||

print('[-]Move ' + os.path.basename(filepath) + ' to failed folder')

|

|

||||||

shutil.move(filepath, str(os.getcwd()) + '/' + 'failed/')

|

|

||||||

os._exit(0)

|

|

||||||

try:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

# ================================================网站规则添加开始================================================

|

# ================================================网站规则添加开始================================================

|

||||||

|

|

||||||

try: # 添加 需要 正则表达式的规则

|

try: # 添加 需要 正则表达式的规则

|

||||||

#=======================javbus.py=======================

|

if re.search('^\d{5,}', file_number).group() in file_number:

|

||||||

if re.search('^\d{5,}', file_number).group() in filename:

|

json_data = json.loads(avsox.main(file_number))

|

||||||

json_data = json.loads(javbus.main_uncensored(file_number))

|

if getDataState(json_data) == 0: #如果元数据获取失败,请求番号至其他网站抓取

|

||||||

|

json_data = json.loads(javdb.main(file_number))

|

||||||

|

|

||||||

|

elif re.search('\d+\D+', file_number).group() in file_number:

|

||||||

|

json_data = json.loads(siro.main(file_number))

|

||||||

|

if getDataState(json_data) == 0: #如果元数据获取失败,请求番号至其他网站抓取

|

||||||

|

json_data = json.loads(javbus.main(file_number))

|

||||||

|

elif getDataState(json_data) == 0: #如果元数据获取失败,请求番号至其他网站抓取

|

||||||

|

json_data = json.loads(javdb.main(file_number))

|

||||||

|

|

||||||

except: # 添加 无需 正则表达式的规则

|

except: # 添加 无需 正则表达式的规则

|

||||||

# ====================fc2fans_club.py===================

|

if 'fc2' in file_number:

|

||||||

if 'fc2' in filename:

|

|

||||||

json_data = json.loads(fc2fans_club.main(file_number.strip('fc2_').strip('fc2-').strip('ppv-').strip('PPV-').strip('FC2_').strip('FC2-').strip('ppv-').strip('PPV-')))

|

json_data = json.loads(fc2fans_club.main(file_number.strip('fc2_').strip('fc2-').strip('ppv-').strip('PPV-').strip('FC2_').strip('FC2-').strip('ppv-').strip('PPV-')))

|

||||||

elif 'FC2' in filename:

|

elif 'FC2' in file_number:

|

||||||

json_data = json.loads(fc2fans_club.main(file_number.strip('FC2_').strip('FC2-').strip('ppv-').strip('PPV-').strip('fc2_').strip('fc2-').strip('ppv-').strip('PPV-')))

|

json_data = json.loads(fc2fans_club.main(file_number.strip('FC2_').strip('FC2-').strip('ppv-').strip('PPV-').strip('fc2_').strip('fc2-').strip('ppv-').strip('PPV-')))

|

||||||

#print(file_number.strip('FC2_').strip('FC2-').strip('ppv-').strip('PPV-'))

|

elif 'HEYZO' in number or 'heyzo' in number or 'Heyzo' in number:

|

||||||

#=======================javbus.py=======================

|

json_data = json.loads(avsox.main(file_number))

|

||||||

|

elif 'siro' in file_number or 'SIRO' in file_number or 'Siro' in file_number:

|

||||||

|

json_data = json.loads(siro.main(file_number))

|

||||||

else:

|

else:

|

||||||

json_data = json.loads(javbus.main(file_number))

|

json_data = json.loads(javbus.main(file_number))

|

||||||

|

if getDataState(json_data) == 0: #如果元数据获取失败,请求番号至其他网站抓取

|

||||||

|

json_data = json.loads(avsox.main(file_number))

|

||||||

|

elif getDataState(json_data) == 0: #如果元数据获取失败,请求番号至其他网站抓取

|

||||||

|

json_data = json.loads(javdb.main(file_number))

|

||||||

|

|

||||||

# ================================================网站规则添加结束================================================

|

# ================================================网站规则添加结束================================================

|

||||||

|

|

||||||

|

title = str(json_data['title']).replace(' ','')

|

||||||

|

|

||||||

|

|

||||||

title = json_data['title']

|

|

||||||

studio = json_data['studio']

|

studio = json_data['studio']

|

||||||

year = json_data['year']

|

year = json_data['year']

|

||||||

outline = json_data['outline']

|

outline = json_data['outline']

|

||||||

runtime = json_data['runtime']

|

runtime = json_data['runtime']

|

||||||

director = json_data['director']

|

director = json_data['director']

|

||||||

actor_list = str(json_data['actor']).strip("[ ]").replace("'",'').replace(" ",'').split(',') #字符串转列表

|

actor_list = str(json_data['actor']).strip("[ ]").replace("'", '').split(',') # 字符串转列表

|

||||||

release = json_data['release']

|

release = json_data['release']

|

||||||

number = json_data['number']

|

number = json_data['number']

|

||||||

cover = json_data['cover']

|

cover = json_data['cover']

|

||||||

|

try:

|

||||||

|

cover_small = json_data['cover_small']

|

||||||

|

except:

|

||||||

|

aaaaaaa=''

|

||||||

imagecut = json_data['imagecut']

|

imagecut = json_data['imagecut']

|

||||||

tag = str(json_data['tag']).strip("[ ]").replace("'", '').replace(" ", '').split(',') # 字符串转列表

|

tag = str(json_data['tag']).strip("[ ]").replace("'", '').replace(" ", '').split(',') # 字符串转列表

|

||||||

actor = str(actor_list).strip("[ ]").replace("'", '').replace(" ", '')

|

actor = str(actor_list).strip("[ ]").replace("'", '').replace(" ", '')

|

||||||

|

actor_photo = json_data['actor_photo']

|

||||||

|

website = json_data['website']

|

||||||

|

source = json_data['source']

|

||||||

|

|

||||||

|

if title == '' or number == '':

|

||||||

|

print('[-]Movie Data not found!')

|

||||||

|

moveFailedFolder()

|

||||||

|

|

||||||

|

if imagecut == '3':

|

||||||

|

DownloadFileWithFilename()

|

||||||

|

|

||||||

|

|

||||||

# ====================处理异常字符====================== #\/:*?"<>|

|

# ====================处理异常字符====================== #\/:*?"<>|

|

||||||

#if "\\" in title or "/" in title or ":" in title or "*" in title or "?" in title or '"' in title or '<' in title or ">" in title or "|" in title or len(title) > 200:

|

if '\\' in title:

|

||||||

# title = title.

|

title=title.replace('\\', ' ')

|

||||||

|

elif r'/' in title:

|

||||||

|

title=title.replace(r'/', '')

|

||||||

|

elif ':' in title:

|

||||||

|

title=title.replace(':', '')

|

||||||

|

elif '*' in title:

|

||||||

|

title=title.replace('*', '')

|

||||||

|

elif '?' in title:

|

||||||

|

title=title.replace('?', '')

|

||||||

|

elif '"' in title:

|

||||||

|

title=title.replace('"', '')

|

||||||

|

elif '<' in title:

|

||||||

|

title=title.replace('<', '')

|

||||||

|

elif '>' in title:

|

||||||

|

title=title.replace('>', '')

|

||||||

|

elif '|' in title:

|

||||||

|

title=title.replace('|', '')

|

||||||

|

# ====================处理异常字符 END================== #\/:*?"<>|

|

||||||

|

|

||||||

naming_rule = eval(config['Name_Rule']['naming_rule'])

|

naming_rule = eval(config['Name_Rule']['naming_rule'])

|

||||||

location_rule = eval(config['Name_Rule']['location_rule'])

|

location_rule = eval(config['Name_Rule']['location_rule'])

|

||||||

except IOError as e:

|

def smallCoverCheck():

|

||||||

print('[-]'+str(e))

|

if imagecut == 3:

|

||||||

print('[-]Move ' + filename + ' to failed folder')

|

if option == 'emby':

|

||||||

shutil.move(filepath, str(os.getcwd())+'/'+'failed/')

|

DownloadFileWithFilename(cover_small, '1.jpg', path)

|

||||||

os._exit(0)

|

img = Image.open(path + '/1.jpg')

|

||||||

|

w = img.width

|

||||||

except Exception as e:

|

h = img.height

|

||||||

print('[-]'+str(e))

|

img.save(path + '/' + number + '.png')

|

||||||

print('[-]Move ' + filename + ' to failed folder')

|

time.sleep(1)

|

||||||

shutil.move(filepath, str(os.getcwd())+'/'+'failed/')

|

os.remove(path + '/1.jpg')

|

||||||

os._exit(0)

|

if option == 'plex':

|

||||||

path = '' #设置path为全局变量,后面移动文件要用

|

DownloadFileWithFilename(cover_small, '1.jpg', path)

|

||||||

def creatFolder():

|

img = Image.open(path + '/1.jpg')

|

||||||

|

w = img.width

|

||||||

|

h = img.height

|

||||||

|

img.save(path + '/poster.png')

|

||||||

|

os.remove(path + '/1.jpg')

|

||||||

|

def creatFolder(): #创建文件夹

|

||||||

global actor

|

global actor

|

||||||

global path

|

global path

|

||||||

if len(actor) > 240: #新建成功输出文件夹

|

if len(actor) > 240: #新建成功输出文件夹

|

||||||

path = location_rule.replace("'actor'","'超多人'",3).replace("actor","'超多人'",3) #path为影片+元数据所在目录

|

path = success_folder+'/'+location_rule.replace("'actor'","'超多人'",3).replace("actor","'超多人'",3) #path为影片+元数据所在目录

|

||||||

#print(path)

|

#print(path)

|

||||||

else:

|

else:

|

||||||

path = location_rule

|

path = success_folder+'/'+location_rule

|

||||||

#print(path)

|

#print(path)

|

||||||

if not os.path.exists(path):

|

if not os.path.exists(path):

|

||||||

try:

|

try:

|

||||||

os.makedirs(path)

|

os.makedirs(path)

|

||||||

except:

|

except:

|

||||||

path = location_rule.replace(actor,"'其他'")

|

path = success_folder+'/'+location_rule.replace('/['+number+']-'+title,"/number")

|

||||||

|

#print(path)

|

||||||

os.makedirs(path)

|

os.makedirs(path)

|

||||||

|

|

||||||

|

|

||||||

#=====================资源下载部分===========================

|

#=====================资源下载部分===========================

|

||||||

def DownloadFileWithFilename(url,filename,path): #path = examle:photo , video.in the Project Folder!

|

def DownloadFileWithFilename(url,filename,path): #path = examle:photo , video.in the Project Folder!

|

||||||

config = ConfigParser()

|

|

||||||

config.read('proxy.ini', encoding='UTF-8')

|

|

||||||

proxy = str(config['proxy']['proxy'])

|

|

||||||

if not str(config['proxy']['proxy']) == '':

|

|

||||||

try:

|

try:

|

||||||

|

proxy = Config['proxy']['proxy']

|

||||||

|

timeout = int(Config['proxy']['timeout'])

|

||||||

|

retry_count = int(Config['proxy']['retry'])

|

||||||

|

except:

|

||||||

|

print('[-]Proxy config error! Please check the config.')

|

||||||

|

i = 0

|

||||||

|

|

||||||

|